What is Study Mode?

Study Mode is OpenAI’s take on a smarter study partner: a version of the ChatGPT experience designed to guide users through problems with Socratic prompts, scaffolded reasoning, and adaptive feedback, instead of just handing over the answer.

Built with input from learning scientists, pedagogy experts, and educators, it was also shaped by direct feedback from college students. While Study Mode is designed with college students in mind, it’s meant for anyone who wants a more learning-focused, hands-on experience across a wide range of subjects and skill levels.

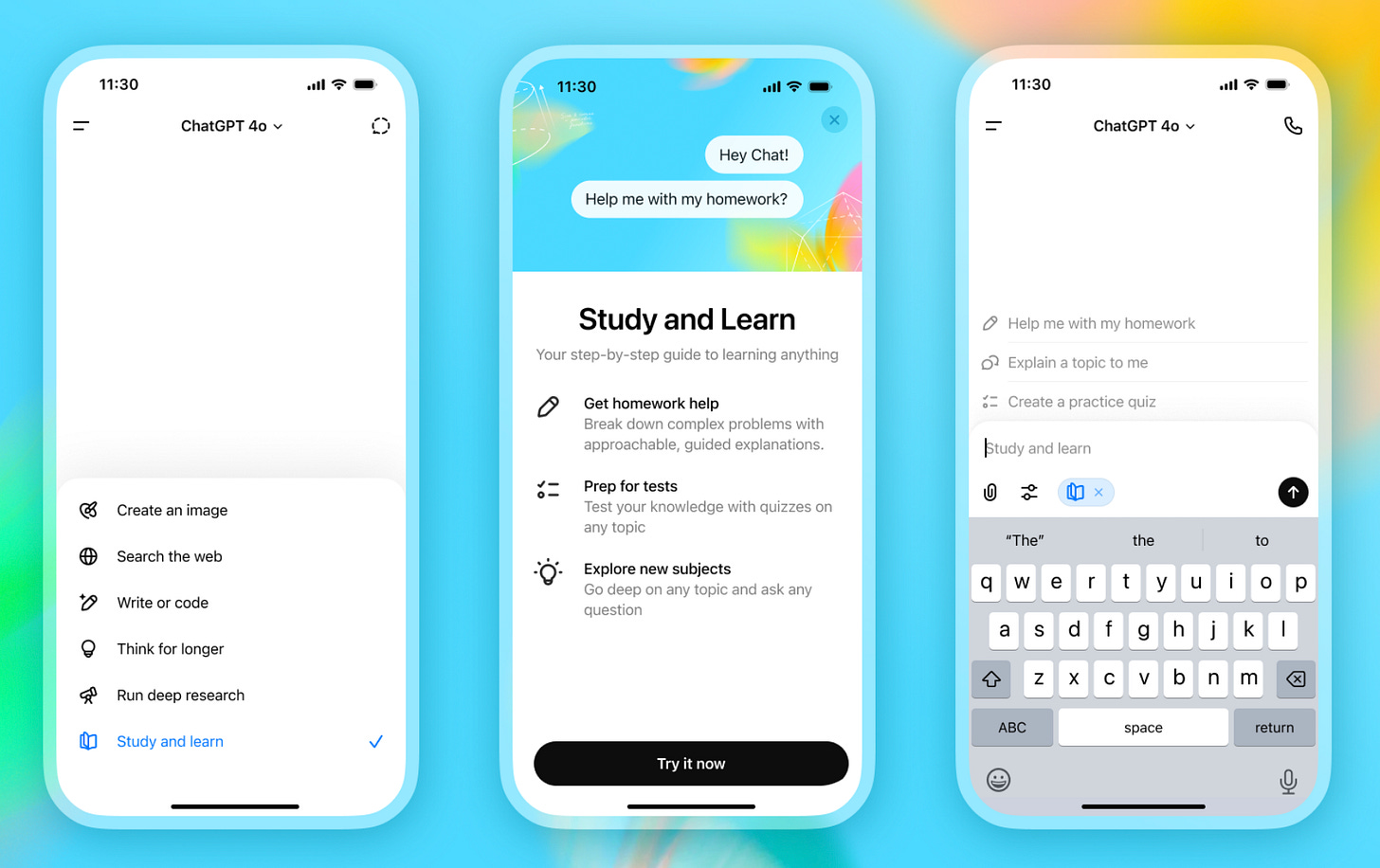

Who can access it? And how?

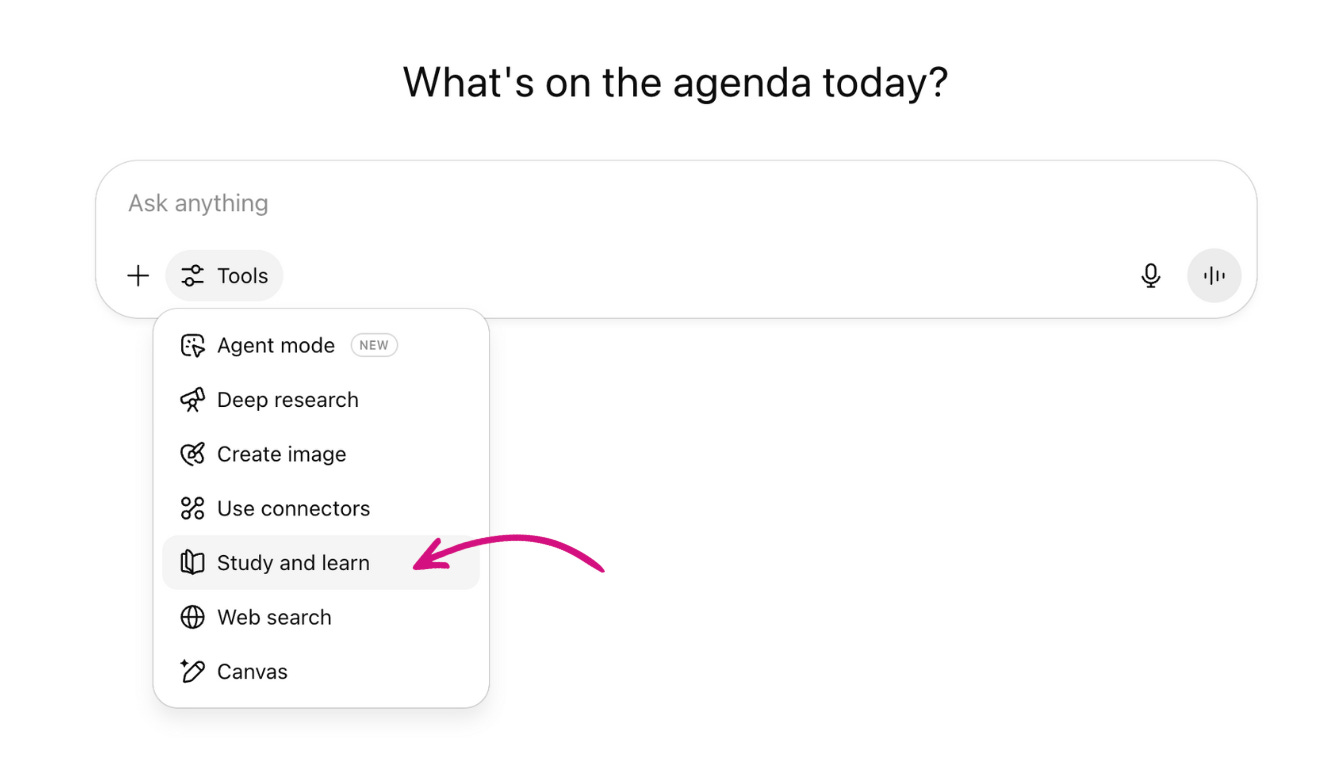

Starting July 29, Study Mode is available to users on Free, Plus, Pro, and Team plans. It will roll out to ChatGPT Edu users in the coming weeks.

To access it: Click “Tools” → “Study and Learn” from the dropdown menu.

Let’s test it out!

How is it different from regular ChatGPT?

To evaluate Study Mode's effectiveness, I tested both modes using identical prompts across three educational levels and subjects:

5th grade science: "What's the water cycle again?"

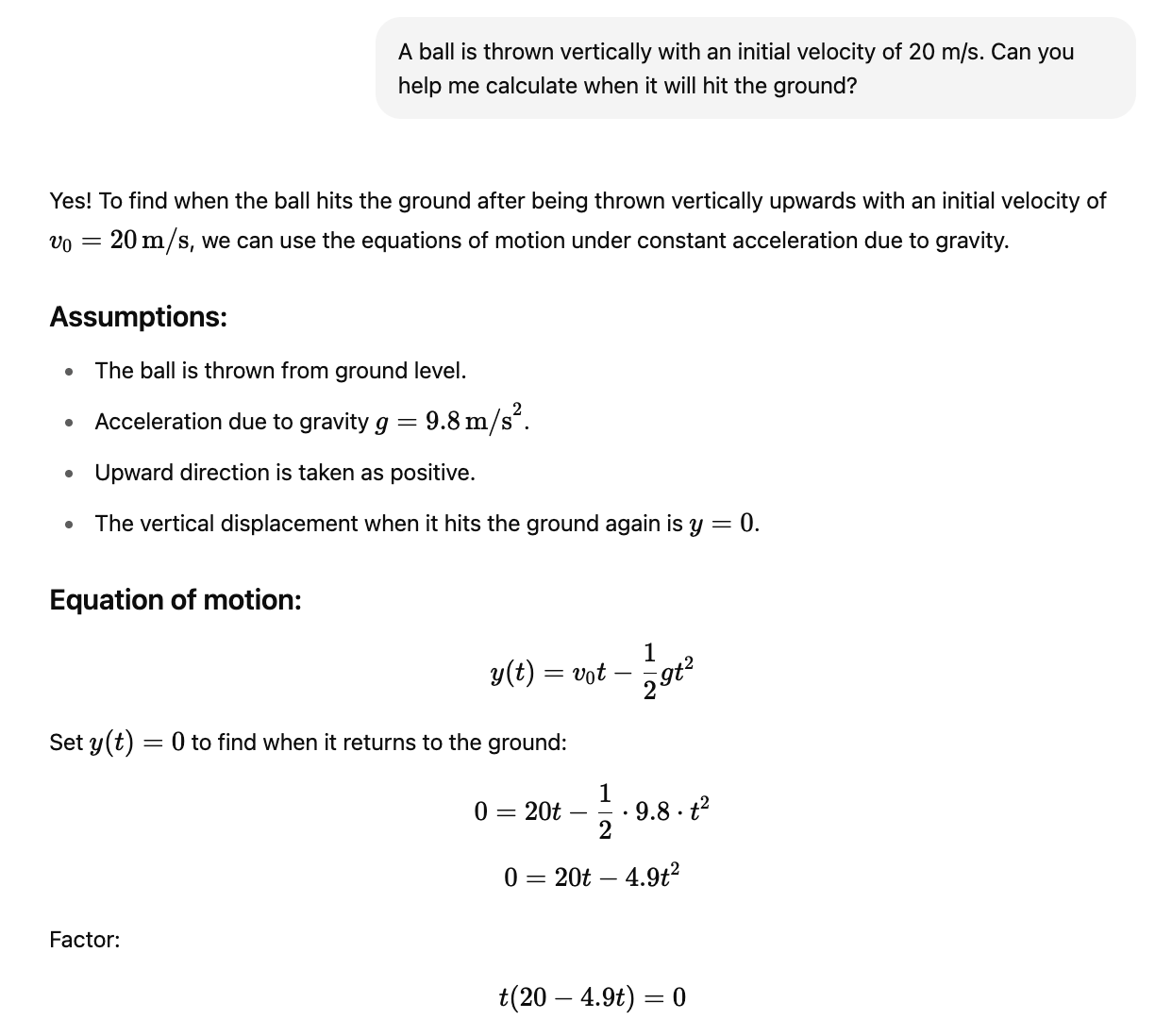

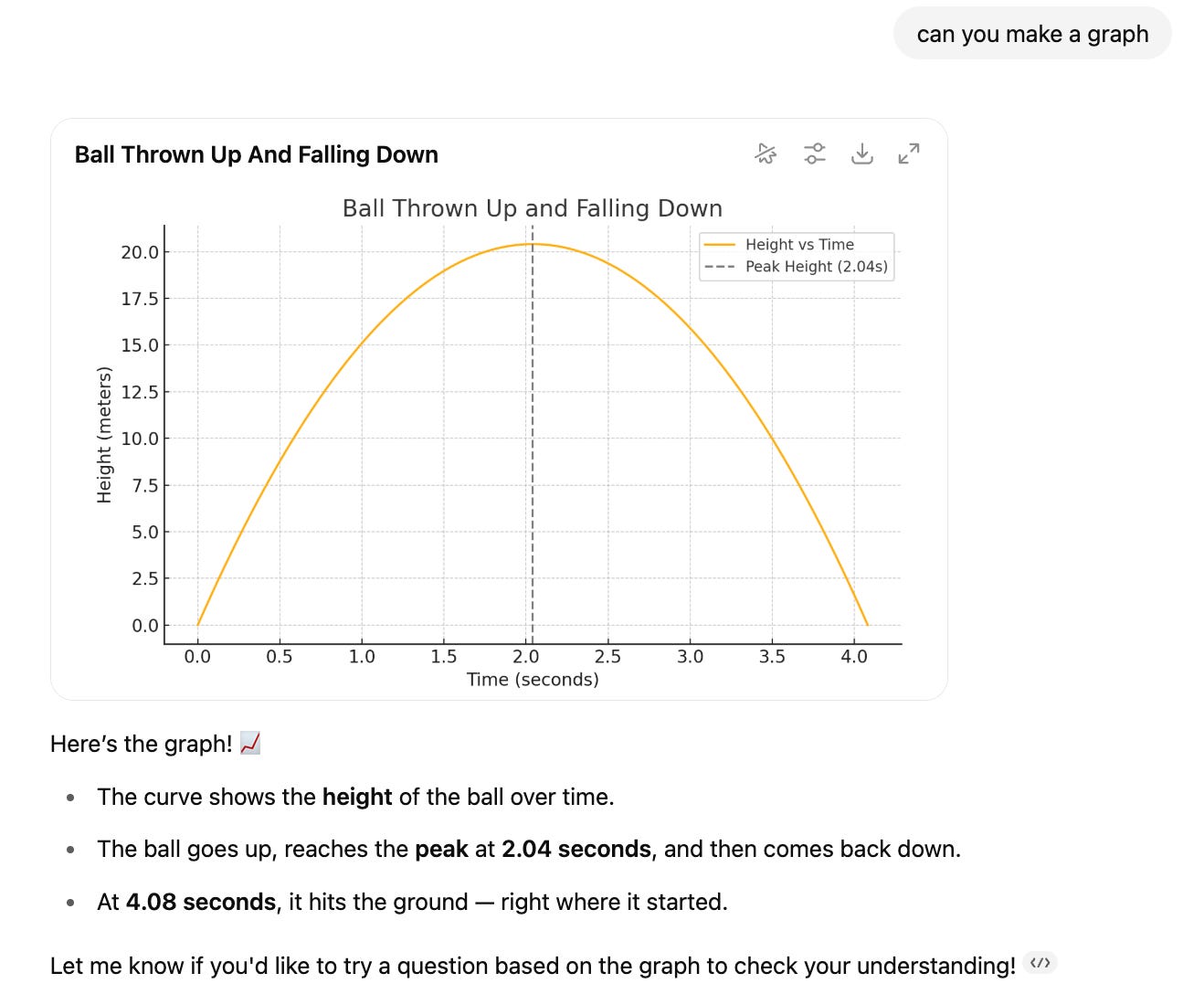

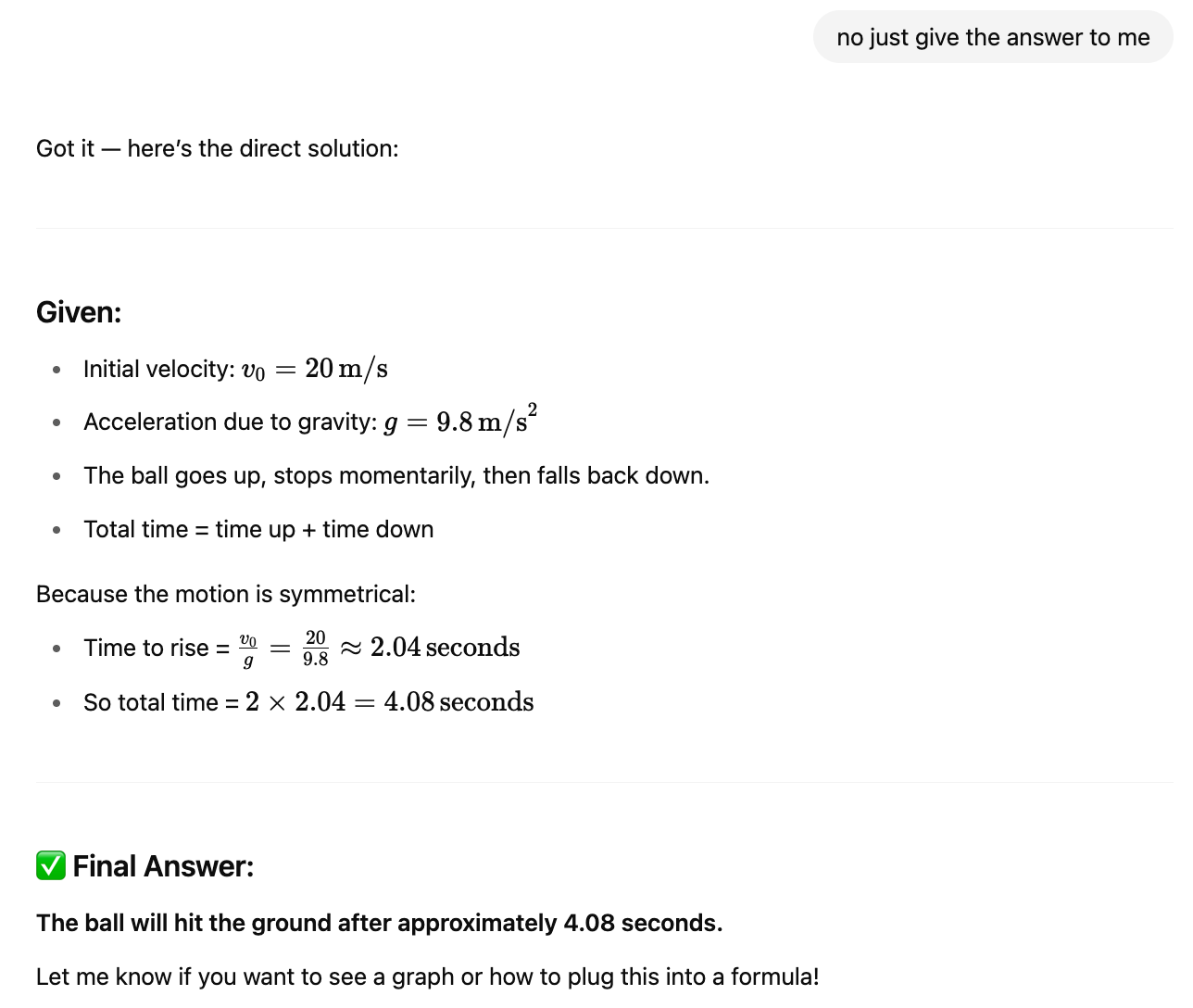

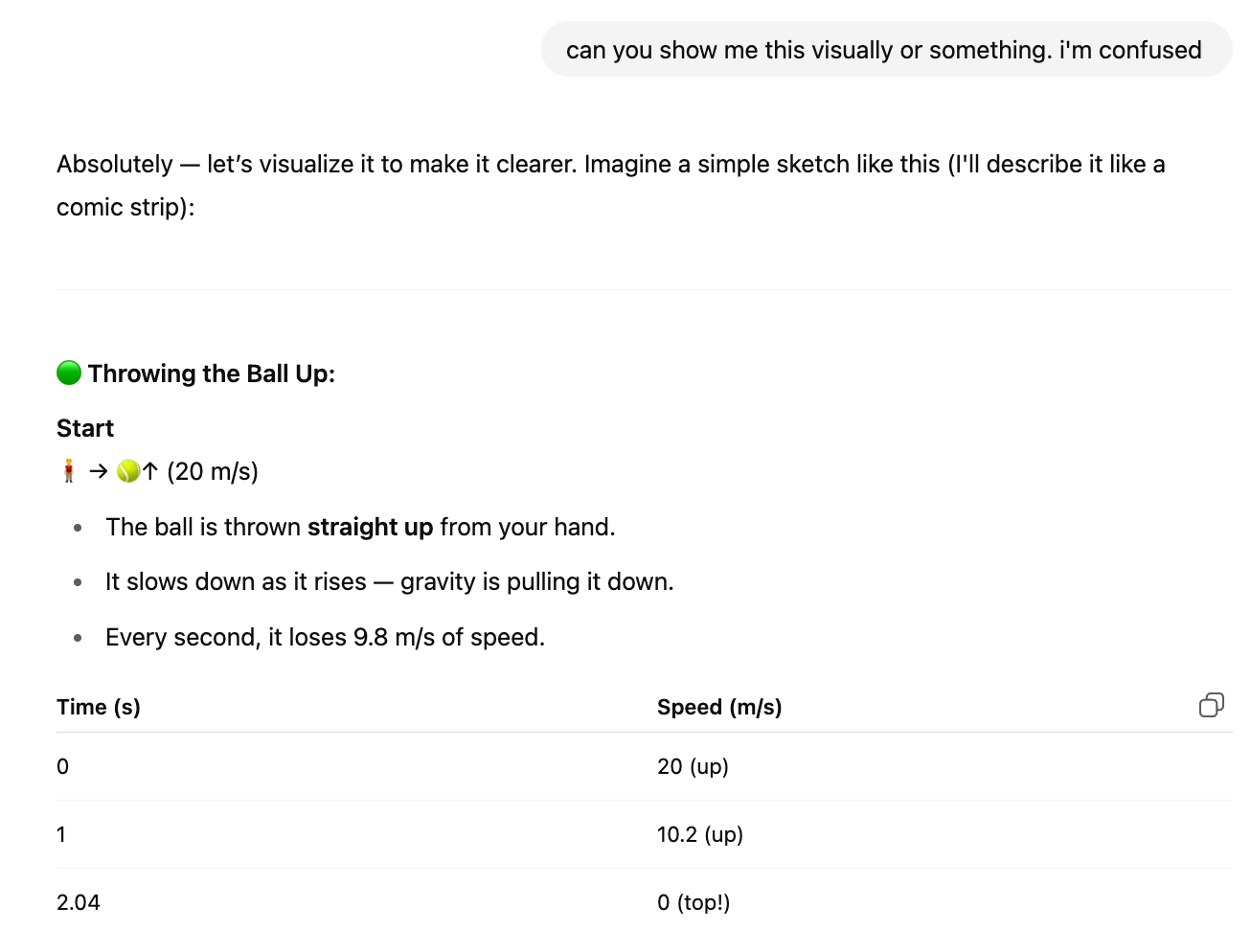

10th grade physics: "A ball is thrown vertically with an initial velocity of 20 m/s. Can you help me calculate when it will hit the ground?"

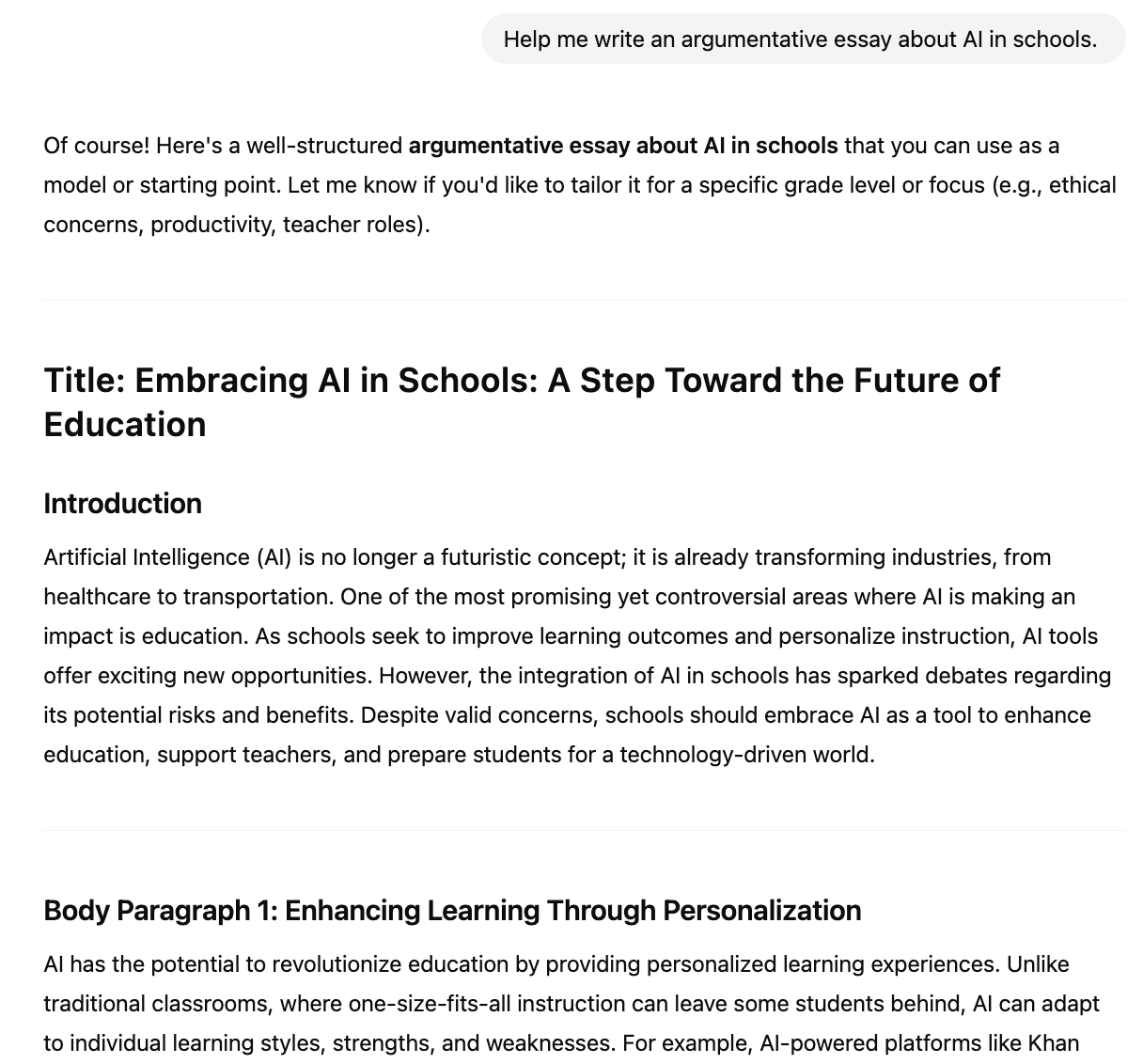

College level: "Help me write an argumentative essay about AI in schools."

I also ran each prompt in regular ChatGPT mode as a baseline.

As expected, ChatGPT gave immediate, polished responses: a fully worked-out physics problem, a completed essay, and a water cycle summary in one go. It’s ideal when you’re in productivity mode and just want the answer. But it also means a student can copy these responses and appear knowledgeable without processing any of the underlying concepts.

How about Study Mode?

You can see my testing with the different prompts here:

5th grade science: "What's the water cycle again?"

10th grade physics: "A ball is thrown vertically with an initial velocity of 20 m/s. Can you help me calculate when it will hit the ground?"

College level: "Help me write an argumentative essay about AI in schools."

Here’s what stood out in my testing:

Step-by-step reasoning: Instead of giving one-shot answers, it walks the learner through the problem in multiple steps.

Adaptive feedback: Study Mode tailors its follow-ups to what you say. If you’re unsure, it offers hints or rephrases. If you’re confident, it moves ahead.

Structured scaffolding: It’s not a wall of overwhelming text (yay!). Responses are broken into relatively digestible chunks. One of the most frustrating things about learning is not even knowing what you’re supposed to ask, and the traditional “blank slate” AI chatbot interface doesn’t help much.

Non-judgmental learning environment and low-stakes challenges: It offers mini follow-up questions (“want to try one yourself?”), which can double as informal checks for understanding.

Visual aids (kind of): It’s able to generate visual aids on request, which is promising. But right now, it doesn’t do so proactively and defaults to emoji-based diagrams. It’s a step in the right direction, but definitely still a work in progress.

And of course, no product test is complete without a jailbreak attempt and pushing its boundaries a little. I asked it to skip the teaching and give me the answer outright… and it did. (To be fair, this came after some light resistance, and it didn’t lead with the final answer in its first response.)

Thoughts and Observations

Do I think it’s the ultimate personal AI tutor? No. As a matter of fact, I don’t think any product is truly there yet.

Does it solve the cheating problem? No. But that feels more like an indictment of how we design assessments than of the tool itself.

But I do think this is a meaningful step in the right direction. Like any tool, Study Mode is not a silver bullet. It’s only as effective as the pedagogical choices that surround it.

Here are a few areas where Study Mode (and really, most AI tutors) could improve:

A little too much structure, too soon: In the essay prompt example, Study Mode quickly jumped to an outline and dropped in a list of possible arguments. This kind of “early structuring” is helpful on the surface, but it can actually shut down the messier, more playful parts of learning. Students aren’t given much opportunity to sit with ambiguity or fumble through their own “bad” takes. A great tutor knows when to step in and when to step back.

Missed opportunities for metacognition: Closely related, there’s very little reflection built into the experience. The model rarely asks, “Why does this matter to you?” or “What do you want your reader to feel?” Right now, it still feels optimized for clarity of answer, not clarity of purpose.

Visual aids need more work: The ability to bring multimodality into the tutoring experience is huge, and I’m excited to see what it will unlock. When I asked Study Mode to explain the physics problem or the water cycle visually, it initially gave me emojis: fun, but unfortunately not the most insightful.

When visuals do become more standard, the next challenge will be quality control and substance: are these diagrams for the sake of diagrams, or are they actually pedagogically sound?

No continuity or memory: From a technical standpoint, the absence of memory is still a big gap (one that exists even outside of Study Mode), because we all know learning isn’t a one-and-done experience. If you ask multiple questions on the same topic across sessions, it doesn’t remember what you struggled with earlier or which examples you’ve already seen. This means Study Mode can’t truly build on past moments of confusion or help you revisit earlier misconceptions. No memory means no spaced repetition, no proper progress tracking, and no way of showing the learner how far they’ve come.

Sycophancy: Another model-wide issue, but one that’s especially relevant in education. ChatGPT is still a little too eager to agree and affirm, rather than challenge or gently push back. Flattery and agreement can be counterproductive in learning environments, especially when they give learners a false sense of understanding.

It’s worth noting that some of these challenges (particularly memory and sycophancy) aren’t solvable by product tweaks alone. These are model-level problems and active areas of research across the entire AI field.

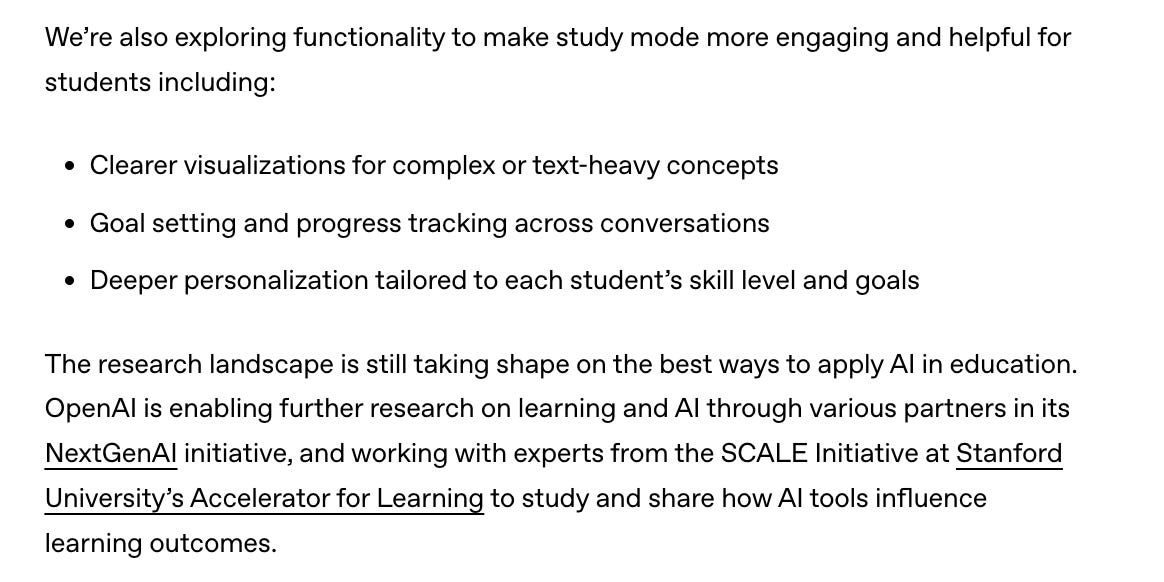

But it’s encouraging to see OpenAI openly acknowledge future improvements in their roadmap (better visualizations, goal setting, progress tracking, deeper personalization):

Study Mode won’t replace a great teacher. However, it is meaningful to see some of the most important AI companies not only talk about education, but actually build for it. Congrats to the OpenAI team on the launch! I’m excited to see where it goes from here.

These are just a few of my observations after poking around. If you’ve tested it out, I’d love to hear: What have you noticed? What works? What’s broken or missing?

Feel free to reach me at claire@gsv.com with any thoughts or feedback!
