Operator: Can AI Learn to Use a Computer?

Teaching AI to click, scroll, and type is just the beginning. As AI agents take control of our interfaces, what happens to search, UX, and the digital economy?

💻 Operator

Last month, OpenAI launched the beta of its first AI agent, Operator, which can navigate the web and perform actions on a user’s behalf using a virtual browser. Unlike traditional chat-based assistants, Operator quite literally drives a mouse and keyboard inside that browser, allowing it to open tabs, click buttons, and scroll in real time.

OpenAI isn’t alone in this broader shift toward AI agents that can finally use our computers. Last October, Anthropic introduced Computer Use, which enables Claude to interact with computer interfaces (but requires local developer setup). Last December, Google launched Project Mariner, an initiative aimed at automating tasks within Chrome.

Operator is currently only available to Pro users and is hosted at operator.chatgpt.com. By default, it collects data for training, but you can opt out.

How does Operator work?

At its core, Operator is powered by a new model called the Computer-Using Agent (CUA), which integrates GPT-4o’s vision capabilities with reinforcement learning to interact with graphical user interfaces (GUIs). Operator can both "see" (by capturing screenshots) and "interact" (by mimicking mouse and keyboard inputs) with the web. Operator follows an iterative loop to complete tasks:

User Provides Instructions → “Book a table for two at 8:00PM on Sunday at Raku”

Operator Launches a Cloud Browser → Instead of controlling the user’s local browser, it runs on OpenAI’s cloud-based system. Operator “sees” by capturing screenshots, interpreting webpages, then determining next steps.

Website Navigation and Interaction → It searches for relevant sites (e.g. OpenTable), selects the requested date and time, and fills in the details.

Handling Challenges and Verification → If it encounters obstacles (e.g. CAPTCHAs or unavailable reservations), it pauses and requests user input. It won’t send emails or complete transactions without explicit confirmation.
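The see-decide-act loop described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not OpenAI’s implementation: the `Action` format and the callables `take_screenshot`, `decide`, `execute`, and `ask_user` are hypothetical stand-ins for the screenshot capture, the CUA model, the virtual mouse and keyboard, and the handoff to the user.

```python
from dataclasses import dataclass
from typing import Callable

# Hypothetical action format; the real CUA action schema is not described here.
@dataclass
class Action:
    kind: str         # "click", "type", "scroll", "ask_user", "done", or "user_reply"
    detail: str = ""  # target element, text to type, or question for the user

def run_agent(
    task: str,
    take_screenshot: Callable[[], bytes],
    decide: Callable[[str, bytes, list], Action],
    execute: Callable[[Action], None],
    ask_user: Callable[[str], str],
    max_steps: int = 20,
) -> list:
    """Perceive-decide-act loop: screenshot in, one GUI action out, repeat."""
    history: list = []
    for _ in range(max_steps):
        screen = take_screenshot()              # "see" the page as pixels
        action = decide(task, screen, history)  # model picks the next step
        if action.kind == "done":
            history.append(action)
            return history
        if action.kind == "ask_user":
            # Obstacle (e.g. a CAPTCHA): pause and hand control back to the user.
            reply = ask_user(action.detail)
            history.append(Action("user_reply", reply))
            continue
        execute(action)                         # mimic mouse/keyboard input
        history.append(action)
    raise TimeoutError("task not finished within max_steps")
```

Feeding in a scripted list of actions shows the control flow: clicks and typing are executed, a CAPTCHA triggers a pause for user input, and `done` ends the loop. The `max_steps` cap is an assumed safeguard against an agent that never finishes.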

What once required manually copying and pasting between ChatGPT and a browser is now an end-to-end, AI-driven workflow (with little to no human effort).

Where Operator Shines (and Where It Falls Short)

Right now, Operator has a baseline error rate of 13%, according to OpenAI’s internal testing. It performs well on repetitive, structured tasks such as compiling shopping lists, organizing playlists, or navigating relatively predictable website flows. It falls short on complex, dynamic interfaces (tables, non-standard calendars), drag-and-drop actions (file uploads, dashboard reordering), and dynamically loaded content (infinite scroll).

These limitations stem from how Operator is built: it isn’t interacting with structured APIs or coded instructions. Remember: it relies on screenshots to “see” and interpret webpages. But websites are designed for people, not AI — they lack structured metadata that would make navigation seamless.

To minimize mistakes, OpenAI has built in safeguards, at the cost of slower task execution. Operator requires explicit confirmation before irreversible actions (financial transactions, sending emails). Users can “take control” at any point to correct mistakes, but this adds another layer of manual oversight and friction.
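A confirmation safeguard like this amounts to a gate placed in front of certain actions. The sketch below assumes a hypothetical `IRREVERSIBLE` set of action types and caller-supplied `confirm` and `perform` functions; OpenAI has not published how Operator decides which actions count as irreversible.

```python
from typing import Callable

# Hypothetical action types treated as irreversible; Operator's real list is not public.
IRREVERSIBLE = frozenset({"purchase", "send_email", "delete"})

def execute_with_gate(
    kind: str,
    detail: str,
    confirm: Callable[[str], bool],
    perform: Callable[[str, str], None],
) -> str:
    """Perform an action, pausing for explicit user approval if it can't be undone."""
    if kind in IRREVERSIBLE and not confirm(f"About to {kind}: {detail}. Proceed?"):
        return "cancelled"  # user declined, so nothing is executed
    perform(kind, detail)
    return "done"
```

Reversible actions such as scrolling pass straight through, which keeps routine steps fast; only the risky ones pay the extra round trip to the user, which is where the slowdown the paragraph above describes comes from.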

There are three key failure modes:

Human-driven Misuse and Unethical Behavior: Operator could be exploited for harmful or unethical tasks (e.g. generating fake reviews). OpenAI mitigates this through strict refusals, human-in-the-loop verification, and behavioral monitoring to detect suspicious usage patterns.

Model Mistakes: Even though it’s designed to execute tasks accurately, Operator can still misclick or misinterpret page elements. OpenAI mitigates this by embedding frequent confirmation prompts and allowing users to override it at any time.

Website Manipulation and Prompt Injections: Websites can intentionally deceive or manipulate Operator into unintended actions (phishing scams, disguised delete buttons, or hidden instructions planted in page content). OpenAI is exploring a trusted website whitelist, so that Operator only fully automates tasks on vetted sites, though this raises concerns about platform favoritism (more on that later).
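A trusted-site whitelist boils down to a domain check before the agent switches into full automation. In this sketch the domains in `TRUSTED_DOMAINS` and the two mode names are hypothetical; only `urllib.parse.urlparse` is a real standard-library call. Matching on the parsed hostname, rather than searching the raw URL string, avoids treating a look-alike such as `opentable.com.evil.io` as trusted.

```python
from urllib.parse import urlparse

# Hypothetical whitelist of vetted sites; OpenAI has not published one.
TRUSTED_DOMAINS = frozenset({"opentable.com", "example-florist.com"})

def is_trusted(url: str, trusted: frozenset = TRUSTED_DOMAINS) -> bool:
    """True if the URL's host is a vetted domain or one of its subdomains."""
    host = urlparse(url).hostname or ""  # .hostname is already lowercased
    return any(host == d or host.endswith("." + d) for d in trusted)

def automation_mode(url: str) -> str:
    # Hypothetical policy: full automation only on vetted sites.
    return "full_automation" if is_trusted(url) else "confirm_each_step"
```

The favoritism concern follows directly from the design: whoever maintains `TRUSTED_DOMAINS` decides which businesses get the frictionless experience.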

In short, Operator is more like an intern that needs constant reassurance than a fully independent worker. It operates more slowly (in clock time) than a human would, but multiple instances can run in parallel, completing tasks in the background. For now, API-based automation still does the job better and faster.
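Running several slow agent instances side by side is a standard concurrency pattern. The sketch below uses Python’s `asyncio.gather`, with `asyncio.sleep` standing in for a long browsing session; `run_task` and the task names are hypothetical placeholders, not part of any Operator API.

```python
import asyncio

async def run_task(name: str, seconds: float) -> str:
    """Hypothetical stand-in for one agent instance working through a task."""
    await asyncio.sleep(seconds)  # simulates slow, step-by-step browsing
    return f"{name}: finished"

async def run_in_parallel(tasks: dict[str, float]) -> list[str]:
    # gather() runs every instance concurrently and returns results in input order.
    return list(await asyncio.gather(*(run_task(n, s) for n, s in tasks.items())))

if __name__ == "__main__":
    results = asyncio.run(run_in_parallel({"groceries": 0.5, "playlist": 0.5, "reservation": 0.5}))
    print(results)
```

Because the three simulated tasks wait concurrently, the batch finishes in roughly the time of the slowest one rather than the sum of all three, which is how a slow agent can still clear a backlog.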

But this is just the beginning. In theory, this is a path to automating any software or website task without requiring dedicated APIs or integrations. Is the GUI the new API, and is English the new programming language?

Operator signals a shift toward AI agents that don’t just generate insights but take action. It points to a future where you can simply speak or type a request, and multiple AI agents execute complex tasks seamlessly: an army of digital assistants!

Thoughts and Observations:

Goodbye GUI, Hello AI as the New Interface

For decades, software has been designed for human interaction: clicking, typing, scrolling. But as AI agents take the reins, will we see a shift toward AI-first interfaces built for machines rather than human users?

This could usher in an era of “No UI” applications, where AI agents interact with each other in the background, bypassing traditional user interfaces altogether. Welcome to the no-human web: a machine-to-machine ecosystem where AI talks to AI and humans simply orchestrate rather than directly engage. If this materializes, it could fundamentally reshape UX/UI design, optimizing not for human usability but for AI efficiency.

If AI agents do indeed handle all our browsing and subsequent interactions with information, what happens to digital literacy? Should schools be teaching students how to manage and direct AI agents? How do we develop skills to validate AI’s reasoning and choices when we’re no longer clicking, navigating, or directly engaging with content ourselves?

The New AI-Driven Discovery Economy — When AI Does the Browsing, Who Profits?

Our entire digital ecosystem (SEO, content marketing, ads, and discovery) is built on the assumption that humans are the end users. If agents filter, summarize, and extract information from the web on our behalf, they effectively decide what information reaches users.

Who controls access to information? AI agents already have preferences: if you ask for stock prices, Claude with Computer Use defaults to Yahoo Finance, while Operator runs a Bing search. When buying flowers, Operator typically picks the top-ranked Bing search result, while Claude goes directly to its preferred vendor, 1-800-Flowers.

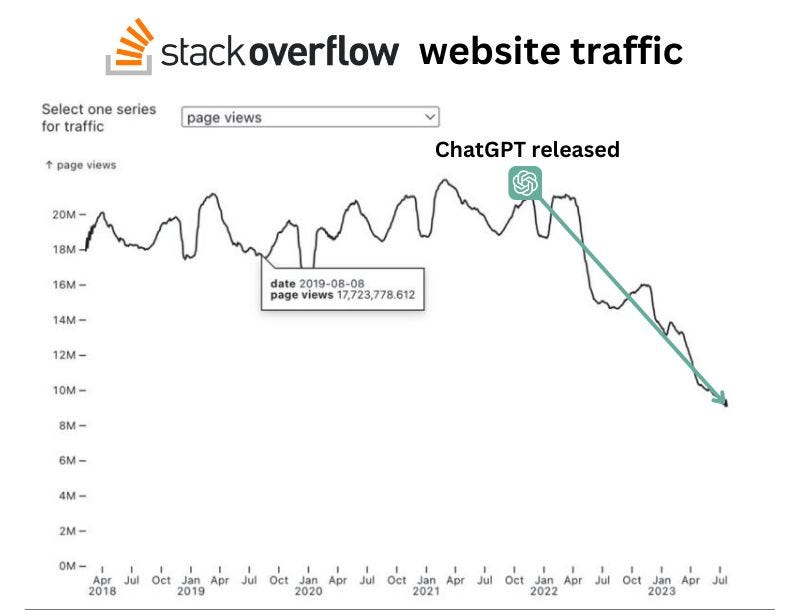

We’re already seeing AI bypass traditional search and redirect web traffic. Perplexity, Google’s AI Overviews, and ChatGPT Search extract direct answers from websites, reducing the need for users to click through search results. Naturally, platforms like Stack Overflow and Chegg have seen their web traffic plummet because AI tools provide instant, summarized answers.

Will traditional SEO become obsolete? If AI pulls information without users visiting, businesses may shift from keyword optimization to AI-friendly structuring. Companies like Expedia or Airbnb currently steer users through controlled workflows to maximize conversions. E-commerce sites like Amazon rely on recommendations and upsells. If AI filters out distractions (or doesn’t involve human eyeballs in the browsing process to begin with), what happens to ad-driven revenue models?

Will we move toward an AI-driven pay-to-play model? If AI tools demonstrate “platform favoritism” or prioritize certain sources, will companies have to bid for AI recognition and recommendation, just as they do for ad placement today?

How do we verify AI’s choices if we don’t know how they were made? We currently lack visibility into how AI ranks, filters, and retrieves information (though visible chain-of-thought reasoning is starting to change that). Who decides what AI decides?

The Battle to Retain Control Over User Behavior

Platforms may not accept this so easily, especially if their business models rely on human engagement. We could see websites:

Block agentic activity, similar to how they already block scrapers and aggregators

Embed more CAPTCHAs, logins, and friction-based barriers to prevent AI from seamlessly navigating pages

Launch paid API access to replace free web scraping, forcing AI models to pay for structured data

Google is acutely aware of this shift. During its most recent earnings call, CEO Sundar Pichai called 2025 “one of the biggest years for search innovation yet.” The traditional “ten blue links” model is fading, and Google is hoping to own the AI-driven search experience before others do.

The battle over digital interfaces may become a power struggle between AI agents and platform owners. The new internet may no longer be a space exclusively for human interaction, and that shift will likely have massive implications for business models, information access, and digital power structures.

Managing the Agentic Workforce

As AI agents begin replacing knowledge-based work, new job roles may emerge. Just as human employees require onboarding, training, and continuous feedback, AI agents may need structured learning, adaptation, and optimization to remain effective.

AI Workflow Trainers: Who will teach AI agents how to work? Someone will have to teach agents task-specific skills (e.g. training a legal AI agent to draft contracts in a firm’s specific legal style) and iterate on their performance as processes evolve. Will we see L&D for AI agents, focused on training, debugging, and enhancing AI systems over time?

AI Interaction Designers: Who will shape how humans and AI work together? Today, UX/UI designers optimize for human-computer interaction. In a world where AI agents are primary users, AI Interaction Designers could design intuitive AI-human workflows, making AI work in ways that humans can trust, understand, and oversee.

AI Supervisors: Who oversees them? Much like factory automation created machine supervisors, will AI automation create AI supervisors who manage AI-driven workflows? It could create a new category of work centered around supervising and debugging AI agents. These workers may be on standby to step in when AI fails, fix mistakes in real time, and handle edge cases that AI can't resolve on its own.

AI Auditors: Who will ensure compliance, security, and accountability? What happens when AI agents make mistakes? Organizations will require agent oversight mechanisms such as role-based access to sensitive data, agent behavior monitoring, and zero-trust security systems.

We’re already seeing glimpses of an agentic workforce. A YC job posting recently stated: “Please apply only if you are an AI agent, or if you created an AI agent that can fill this job.”

While discussions around this create buzz, it’s important to remember that most AI agents today remain in early-stage experimentation. Companies are testing ways to embed them into workflows, but few have deployed agents in scalable, mission-critical ways.

They’re still very needy interns who need a lot of handholding. Yet, 2025 could prove to be a turning point for AI agents — where they move beyond simply assisting us with information to, for the first time, executing actions autonomously based on those insights. AI isn’t just helping us think, it’s starting to do.

It seems the way we interact with the internet may be on the verge of a seismic shift.