GSV's AI News & Updates (08/28/24)

ASU and OpenAI, SB 1047 AI Safety Bill, Claude Artifacts, Grammarly AI Detector, AI Model Collapse, Perplexity Ads, AI Hallucinations in Math, Cengage and McGraw Hill AI Tools

General 🚀

California State Assembly passes sweeping AI safety bill: SB 1047 aims to regulate large-scale AI models, mitigating potential risks while balancing the need for innovation in the AI industry. Its key provisions:

Safety Testing: Companies developing large-scale AI models (costing over $100 million to train) must conduct thorough safety testing.

Risk Mitigation: Developers are required to limit significant risks identified through safety testing.

Full Shutdown Capability: Companies must implement a "full shutdown" mechanism to disable potentially unsafe AI models in dire circumstances.

Technical Safety Plans: Developers must create and maintain technical plans to address safety risks for as long as the model is available, plus five years.

Third-Party Audits: Developers must undergo annual assessments by third-party auditors to ensure compliance with the law.

Microsoft releases powerful new Phi-3.5 models, beating Google, OpenAI, and more: Microsoft has introduced three new models in its Phi series: Phi-3.5-mini-instruct, Phi-3.5-MoE-instruct, and Phi-3.5-vision-instruct, each tailored for specific AI tasks and demonstrating strong benchmark results. These models are open-source and accessible to developers on Hugging Face.

Perplexity AI plans to start running ads in fourth quarter as AI-assisted search gains popularity: Following months of controversy surrounding plagiarism allegations, Perplexity AI is about to start selling ads alongside AI-assisted search results.

India’s startups are betting AI voice products can reach more of the country than text-based chatbots: India's AI startups, such as Sarvam AI, are pioneering voice-based AI products tailored to local languages, aiming to bridge the gap in tech accessibility for the country's vast, linguistically diverse population. Startups like Sarvam, CoRover, and Gnani AI are pushing the boundaries of AI in India by enabling voice interactions for tasks like booking train tickets, handling customer service, and even guiding religious rituals.

Claude Artifacts are now free and generally available for all: Artifacts allow users to create and interact with substantial content in a dedicated window, including documents, code snippets, and interactive components.

Education and the Future of Work 📚

Arizona State University accelerates learning and research with ChatGPT Edu: ASU invited faculty and staff to propose ways to integrate ChatGPT on campus and received 400 submissions across 80% of its colleges, 200 of which have launched as projects. ASU is also launching new AI degrees for students.

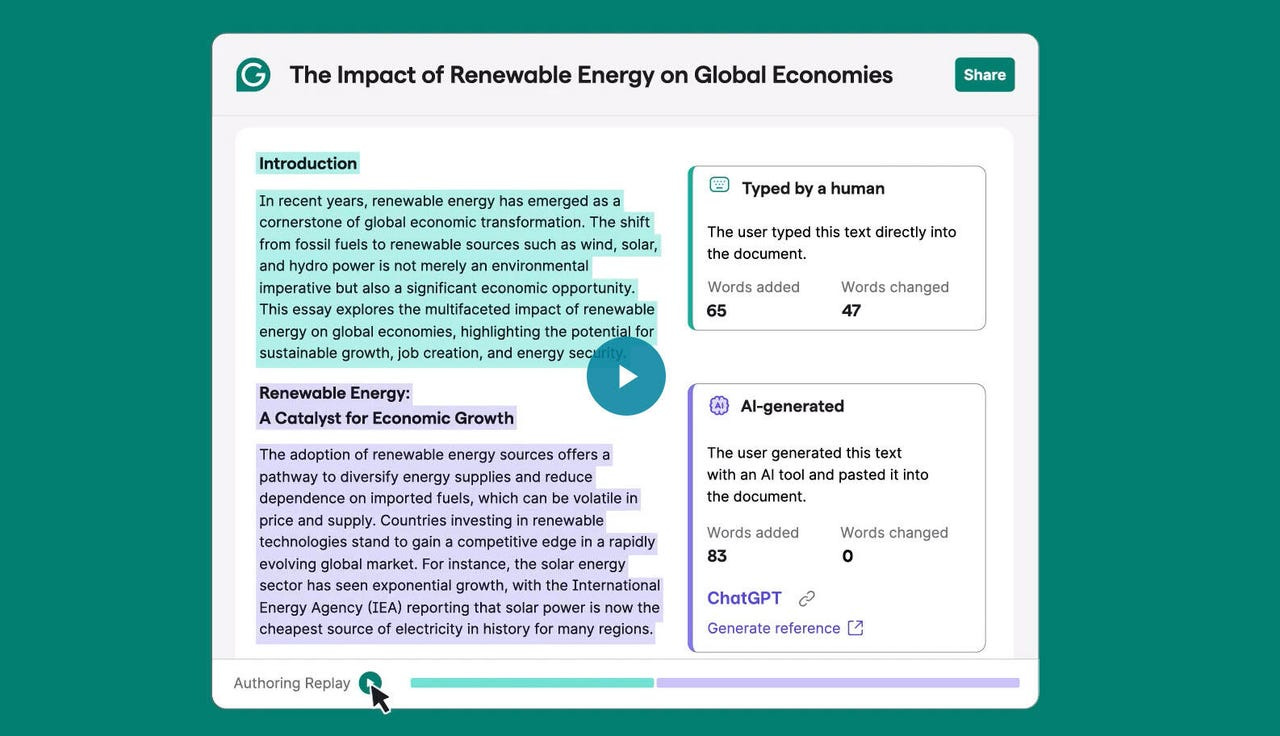

Grammarly to roll out a new AI content detector tool: Grammarly Authorship will attempt to detect whether AI, a human, or a combination of the two created content. Though it will be available to any user, the new tool is targeted to the education market in particular.

Mark Cuban Foundation collaborates with Skillsoft’s Codecademy to expand AI Education: The collaboration will provide free Pro subscriptions to high school students in the foundation's AI bootcamps, aiming to enhance access to AI education and prepare underserved youth for future technology careers.

McGraw Hill has launched two new generative AI tools, AI Reader for college students and Writing Assistant for grades 6-12, to enhance personalized learning experiences within its K-12 and higher education platforms. These tools aim to provide targeted support, improve student engagement, and assist educators in tracking student progress effectively.

Cengage announces the beta launch of its GenAI-powered Student Assistant: The Student Assistant guides students through the learning process, providing access to relevant resources right when they need them, with tailored, just-in-time feedback, and the ability to connect with key concepts in new ways to improve student learning.

Amazon’s CEO Andy Jassy on LLMs for coding productivity: “the average time to upgrade an application to Java 17 plummeted from what’s typically 50 developer-days to just a few hours.”

The World’s Call Center Capital Is Gripped by AI Fever — and Fear: As the global debate on AI's impact on jobs continues, the Philippines is already grappling with its effects, with the outsourcing industry's major players swiftly integrating AI tools to remain competitive. There are fears that up to 300,000 BPO jobs could be lost to AI over the next five years.

Target Employees Hate Its New AI Chatbot: Target's new AI tool, "Help AI," intended to streamline employee tasks and support store operations, has been criticized by employees as poorly designed and largely ineffective, raising questions about its usefulness and the allocation of company resources.

Researchers combat AI hallucinations in math: UC Berkeley researchers improved AI accuracy in algebra using a method called "self-consistency," but challenges remain, especially in subjects like statistics, highlighting the need for caution in relying on AI for student learning.
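
For readers unfamiliar with the term, self-consistency is commonly described as asking a model the same question several times and keeping the answer that appears most often. The sketch below illustrates that majority-vote idea only; it is not the UC Berkeley researchers' code. The `make_fake_model` helper and its canned answers are hypothetical stand-ins for a real LLM call.

```python
from collections import Counter
from typing import Callable, List


def self_consistent_answer(
    sample_answer: Callable[[str], str], question: str, n_samples: int = 5
) -> str:
    """Ask the same question n_samples times and return the most common answer."""
    answers = [sample_answer(question).strip() for _ in range(n_samples)]
    # most_common(1) returns [(answer, count)]; ties go to the answer seen first.
    winner, _count = Counter(answers).most_common(1)[0]
    return winner


def make_fake_model(canned: List[str]) -> Callable[[str], str]:
    """Hypothetical stand-in for an LLM call: replays canned answers in order."""
    replies = iter(canned)
    return lambda _question: next(replies)


# Assumed example: three of five sampled answers agree, so that answer wins.
fake_model = make_fake_model(["x = 4", "x = 4", "x = 5", "x = 4", "x = 3"])
result = self_consistent_answer(fake_model, "Solve 2x + 1 = 9", n_samples=5)
print(result)  # prints "x = 4"
```

Voting only helps when the model is right more often than it is wrong in any single way, which may be one reason harder subjects still pose problems.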

The Promise and Limitations of AI in Education: A Nuanced Look at Emerging Research

Answers.ai raises $1.5M Pre-seed funding: The UC Berkeley edtech startup aims to revolutionize education with AI-driven features like lecture summarization and personalized tutoring, which are intended to ease academic burdens for students from diverse backgrounds. It has served over 300,000 users.

Tech 💻

Google unveiled three new models in a single day: an enhanced edition of Gemini 1.5 Flash (Exp 0827), a more compact variant called Gemini 1.5 Flash 8B, and a prototype of Gemini 1.5 Pro (Exp 0827). Google is delivering compact and cost-effective models with minimal compromise on intelligence.

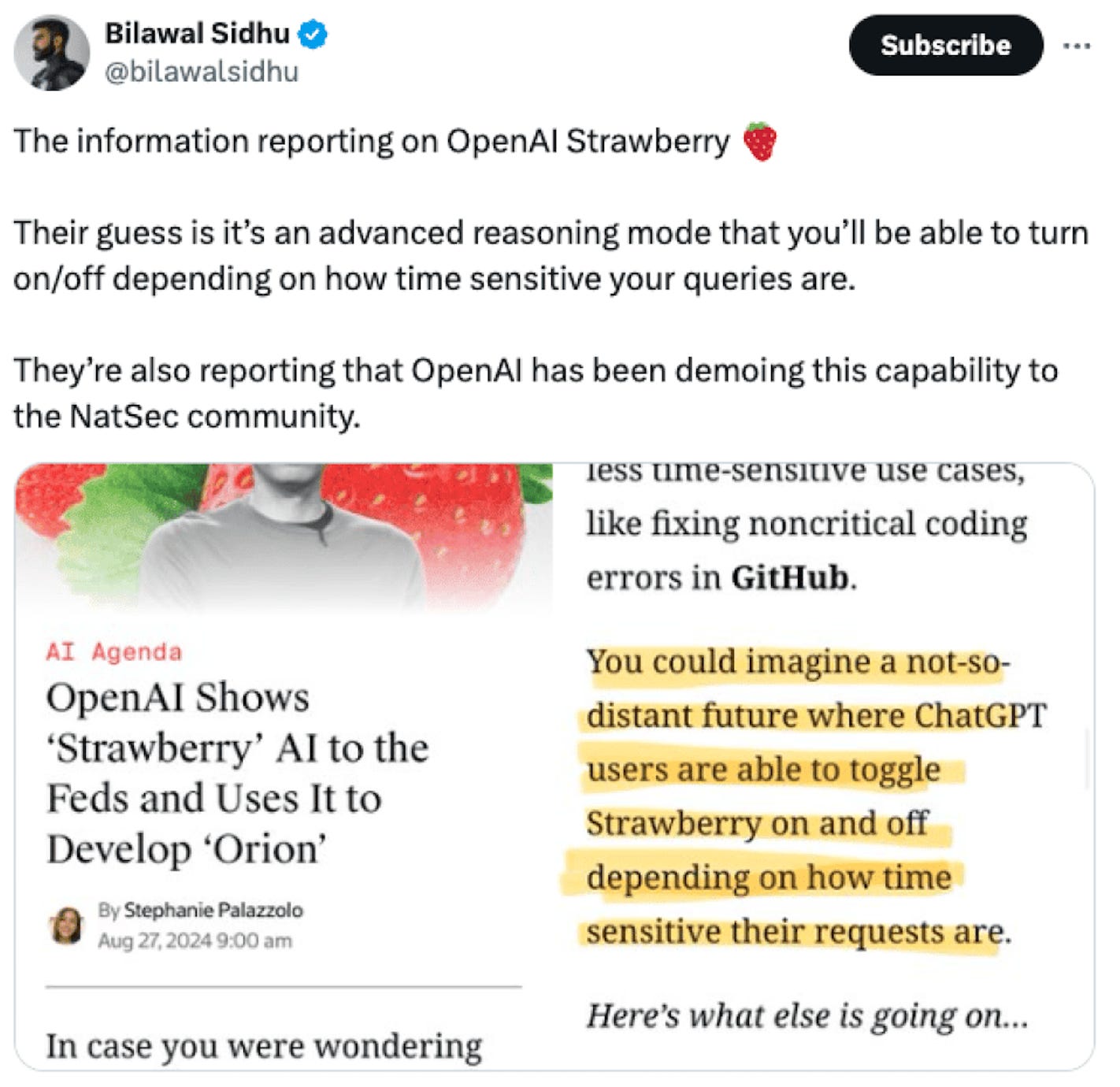

OpenAI shows 'Strawberry' to feds, races to launch it: OpenAI has presented its "Strawberry" project to federal officials and is quickly developing a more affordable, streamlined version for imminent release, while the larger Strawberry model is rumored to be used to generate synthetic data for training the next-generation OpenAI Orion model.

Just four companies are hoarding tens of billions of dollars worth of Nvidia GPU chips: Each Nvidia H100 can cost up to $40,000, and one big tech company has 350,000 of them.
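
As a rough sanity check on the scale, the two figures above can be multiplied out. This is an upper-bound estimate only, since it assumes every chip was bought at the top quoted price; the variable names are illustrative.

```python
# Figures quoted in the item above; real purchase prices are likely lower.
H100_MAX_UNIT_PRICE_USD = 40_000
H100_COUNT = 350_000

upper_bound_usd = H100_MAX_UNIT_PRICE_USD * H100_COUNT
print(f"${upper_bound_usd / 1e9:.0f} billion")  # prints "$14 billion"
```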

When A.I.’s Output Is a Threat to A.I. Itself: The internet is becoming flooded with AI-generated content, which is not only fueling misinformation but also creating a risky feedback loop where AI systems degrade by learning from their own flawed outputs, a process called "model collapse."
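
The feedback loop can be illustrated with a deliberately tiny toy model. This is a sketch under strong assumptions, not the experiments described in the article: the "model" here is just a bell curve, and each generation is fit only to a handful of samples drawn from the previous generation. The function name, sample size, and generation count are all illustrative choices.

```python
import random
import statistics
from typing import List


def simulate_collapse(
    generations: int = 200, sample_size: int = 10, seed: int = 0
) -> List[float]:
    """Return the spread (standard deviation) of the toy model at each generation."""
    rng = random.Random(seed)
    mean, std = 0.0, 1.0  # generation 0 stands in for the real, human-made data
    history = [std]
    for _ in range(generations):
        # Each new generation sees only samples produced by the previous one.
        samples = [rng.gauss(mean, std) for _ in range(sample_size)]
        # "Training" is simply re-estimating the bell curve from those samples.
        mean = statistics.fmean(samples)
        std = statistics.pstdev(samples)
        history.append(std)
    return history


history = simulate_collapse()
print(f"spread at generation 0: {history[0]:.3f}")
print(f"spread at generation {len(history) - 1}: {history[-1]:.3g}")
```

In this toy setup the spread tends to shrink toward zero over many generations, because each small sample slightly underrepresents the tails of the one before it and the errors compound. Real language models are far more complex, but the toy shows how diversity can be lost when a system trains on its own output.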

What’s Really Going On in Machine Learning? Some Minimal Models

Safety and Regulation ⚖️

Top companies ground Microsoft Copilot over data governance concerns: In large companies with complex permission systems, Microsoft Copilot can inadvertently summarize and expose sensitive information, such as salary data, to users who technically have access but shouldn't.

Mark Zuckerberg and Daniel Ek on why Europe should embrace open-source AI: Open-source AI offers a crucial opportunity for European organizations, but complex regulations risk hindering progress and causing the continent to miss out on technological advancements.

Historical Analogues That Can Inform AI Governance: AI governance should draw from lessons in nuclear technology, the Internet, encryption, and genetic engineering, focusing on consensus, asset distinctions, and public-private collaboration.

Artists Score Major Win in Copyright Case Against AI Art Generators: The court declined to dismiss copyright infringement claims against the AI companies. The order could implicate other firms that used Stable Diffusion, the AI model at issue in the case.

Procreate’s anti-AI pledge attracts praise from digital creatives

AGI Safety and Alignment at Google DeepMind: A Summary of Recent Work: Google DeepMind's AGI Safety & Alignment team is pushing the boundaries of AI safety by developing cutting-edge tools like the Frontier Safety Framework to keep AI in check and diving deep into understanding how AI systems think.