GSV's AI News & Updates (08/19/24)

Gemini Live, Project Strawberry, Perplexity Campus, NVIDIA CA Community Colleges, South Korea AI Textbooks, GitLab Foundation x Ballmer Group x OpenAI, CA SB 1047

General 🚀

Google launched Gemini Live, its rival to ChatGPT Voice Mode: The mobile conversational assistant offers advanced voice chat with 10 different voices, and multimodal capabilities are coming later this year.

Google just released the newest version of its AI image generator, Imagen 3: Google used extensive filtering and data labeling to minimize harmful content in datasets and reduce harmful outputs, while also adding its SynthID digital watermark to images created by Imagen 3. Safety protocols aside, Google also claims it offers greater versatility, better prompt understanding, higher image quality, and improved text rendering.

Rumors swirl about OpenAI's mysterious "Project Strawberry" – a new reasoning-focused AI?: Social media teasers and leaks hint at significant advancements, fueling speculation that this model could revolutionize AI's reasoning capabilities.

OpenAI's founding team is experiencing significant turnover: As OpenAI shifts toward a profit-driven model, team tensions and AI development challenges, including GPT-5 delays, raise questions about whether it can maintain its original vision or will become just another tech company.

xAI’s new Grok-2 chatbots bring AI image generation to X: Premium X subscribers are already using the betas to generate fake images of political figures.

Runway’s Gen-3 Turbo can now generate realistic AI videos in seconds: Users can now turn an image into a video in about 15 seconds, bringing AI video generation close to real time.

Education and the Future of Work 📚

Perplexity launches Campus Strategist Program: The program offers students the opportunity to enhance the company's presence on their campuses, collaborate with Perplexity’s leadership, and gain experience in growth marketing.

California and NVIDIA Join Forces to Revolutionize AI Education in Community Colleges: California's partnership with NVIDIA aims to enhance AI education in community colleges, preparing around 100,000 students and educators for AI careers. The initiative focuses on driving economic growth and ensuring equitable access to AI resources for underserved communities.

South Korea will start using AI-infused textbooks in its “future classrooms” next year: Unlike a regular ebook, these AI-enabled digital textbooks will supposedly assess students’ reading level and change the content based on the reader. South Korea expects to be the first country in the world to use these books starting in 2025.

South Korea’s plan for AI textbooks hit by backlash from parents: Despite support from 54% of state schoolteachers, 50,000+ parents have signed a petition arguing that the government's push is premature and could harm children's cognitive development.

Is AI in Schools Promising or Overhyped? Potentially Both, New Reports Suggest: One urges educators to prep for an artificial intelligence boom. The other warns that it could all go awry. Together, they offer a reality check.

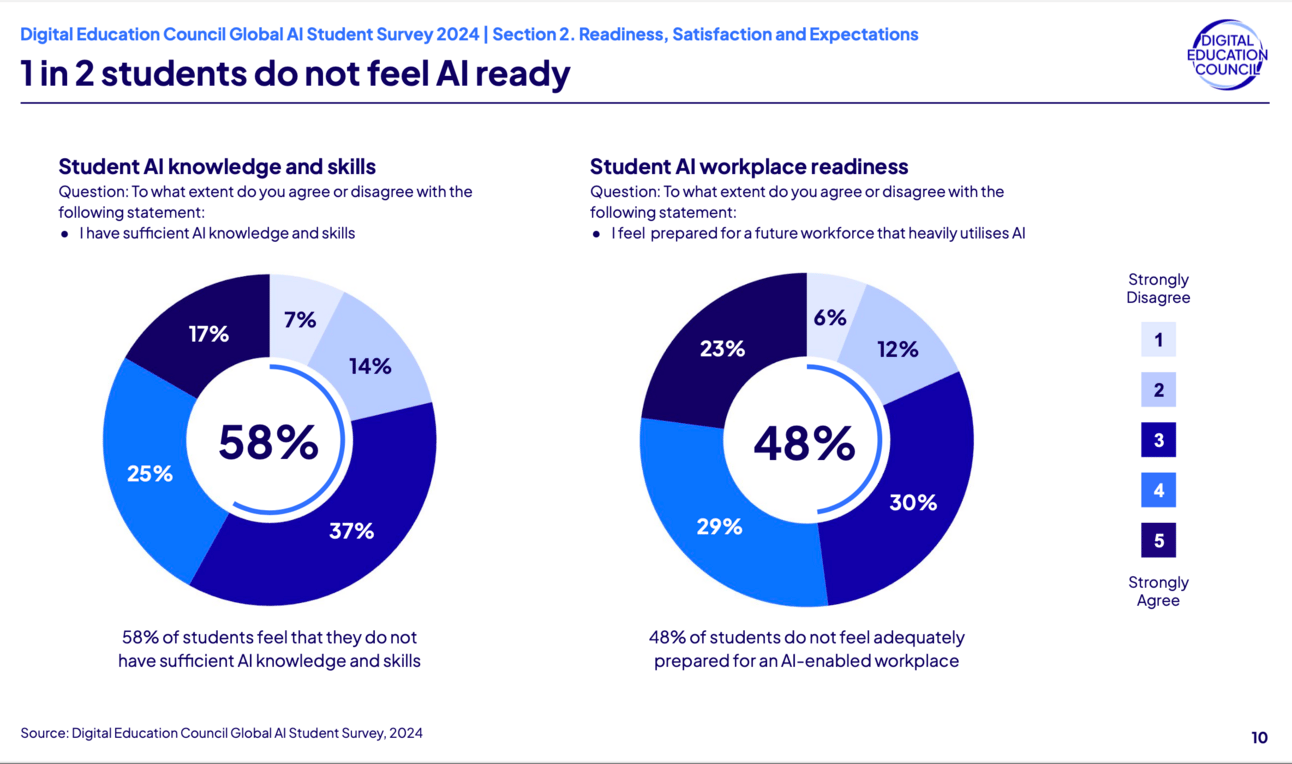

Students Worry Overemphasis on AI Could Devalue Education

55% of students believe excessive AI use in teaching devalues education, and 52% say it harms their academic performance, with only 18% viewing AI-created courses as more valuable than traditional ones.

Despite these concerns, 86% of students regularly use AI tools like ChatGPT, though 86% are unclear on their universities' AI guidelines, underscoring the need for clearer communication and better AI integration in education.

Colleges Race to Ready Students for the AI Workplace: Non-techie students are learning basic generative-AI skills as schools revamp their course offerings to be more job-friendly.

S&P Global and Accenture Join Forces to Unlock Generative AI Potential: S&P Global is set to launch a comprehensive generative AI learning program for all 35,000 employees in August 2024, utilizing Accenture LearnVantage to enhance AI fluency across its workforce.

Managing in the era of gen AI: Will middle managers survive the latest bout of disruption and delayering? Should they?

8 months in: How Rolls Royce and Conagra HR teams use gen AI for talent development

GitLab Foundation, Ballmer Group, and OpenAI join to launch new fund to foster AI innovation, grow economic opportunity: The fund will consist of two phases: a "Demonstration" phase with at least $3.5 million from GitLab Foundation for project prototypes and a "Scaling" phase funded by Ballmer Group, offering grants to expand high-potential projects.

Tech 💻

Nous Research releases Hermes 3: Fine-tuned from Meta's Llama 3.1, the open-source model excels in complex tasks like role-playing, creative writing, reasoning, and decision-making.

Nvidia is building a yet-to-be-released video foundation model: Leaked documents show Nvidia scraping ‘a human lifetime’ of videos per day to train AI.

Anthropic introduces Prompt Caching: It lets developers cache frequently reused prompt context between API calls instead of resending and reprocessing it each time, cutting both processing time and cost. The feature is particularly useful for applications with repetitive tasks or lengthy prompts, offering cost savings of up to 90% and latency reductions of up to 85%.
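To make the "up to 90%" figure concrete, here is a rough input-cost model in Python. It assumes Anthropic's launch pricing, under which writing a prompt prefix to the cache costs 1.25× the base input-token price and reading it back costs 0.1×; those multipliers, the function names, and the token counts are illustrative assumptions, not part of Anthropic's API. It also ignores cache expiry and latency, so every repeat call is assumed to hit the cache.

```python
# Rough input-cost model for prompt caching.
# ASSUMPTION: multipliers relative to the base input-token price follow
# Anthropic's launch pricing (cache write 1.25x, cache read 0.1x).
CACHE_WRITE_MULTIPLIER = 1.25
CACHE_READ_MULTIPLIER = 0.10


def input_cost(prefix_tokens: int, suffix_tokens: int, calls: int, cached: bool) -> float:
    """Total input cost, in base-price token units, for `calls` requests that
    share one prefix and each append `suffix_tokens` of new input."""
    if calls <= 0:
        return 0.0
    suffix_cost = float(suffix_tokens * calls)  # new tokens are never cached
    if not cached:
        # Without caching, the full prefix is billed at the base price every time.
        return float(prefix_tokens * calls) + suffix_cost
    # With caching: one cache write on the first call, then cache reads.
    # Assumes no call arrives after the cache entry has expired.
    write_cost = prefix_tokens * CACHE_WRITE_MULTIPLIER
    read_cost = prefix_tokens * CACHE_READ_MULTIPLIER * (calls - 1)
    return write_cost + read_cost + suffix_cost


def savings(prefix_tokens: int, suffix_tokens: int, calls: int) -> float:
    """Fraction of input cost saved by caching (negative means caching costs more)."""
    baseline = input_cost(prefix_tokens, suffix_tokens, calls, cached=False)
    if baseline == 0:
        return 0.0
    with_cache = input_cost(prefix_tokens, suffix_tokens, calls, cached=True)
    return 1.0 - with_cache / baseline


if __name__ == "__main__":
    # Hypothetical workload: 100k-token shared prefix, 500 new tokens per call, 50 calls.
    print(f"{savings(100_000, 500, 50):.1%}")
```

Under these assumptions, a 100,000-token prefix reused across 50 calls saves roughly 87% of input cost, and the savings approach 90% as the cached prefix dominates. A one-off call with no reuse costs more, not less, which is why the feature pays off for repeated prompts.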

Sakana AI introduces the world's first fully automated AI Scientist: It can generate research ideas, run experiments, analyze data, and produce complete scientific papers for about $15 in computing resources per paper. “Ultimately, we envision a fully AI-driven scientific ecosystem including not only LLM-driven researchers but also reviewers, area chairs and entire conferences”.

Regulation and Policy ⚖️

California's controversial SB 1047 AI bill is on the verge of becoming law: The bill aims to regulate advanced AI models (those that cost more than $100M to train and use more than 10^26 FLOPs of training compute) by mandating safety measures and annual audits and by prohibiting models that pose significant risks. While critics argue it could stifle innovation, supporters believe it is crucial for preventing catastrophic AI harms.
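A back-of-the-envelope check against the bill's compute threshold can use the common rule of thumb that training a dense transformer takes roughly 6 × parameters × training tokens FLOPs. The sketch below applies that approximation (which is not how the bill itself defines compute) together with a simplified reading of the two thresholds summarized above; the model sizes and costs are hypothetical.

```python
# Back-of-the-envelope check against the SB 1047 thresholds summarized above.
# ASSUMPTION: training compute is estimated with the common ~6 * N * D rule of
# thumb for dense transformers; the bill itself does not use this formula.
THRESHOLD_FLOPS = 1e26            # training operations
THRESHOLD_COST_USD = 100_000_000  # training cost in dollars


def approx_training_flops(params: float, tokens: float) -> float:
    """Approximate training FLOPs: ~6 operations per parameter per training token."""
    return 6.0 * params * tokens


def is_covered(training_flops: float, training_cost_usd: float) -> bool:
    """Simplified reading: a model is covered only if it exceeds both thresholds."""
    return training_flops > THRESHOLD_FLOPS and training_cost_usd > THRESHOLD_COST_USD


if __name__ == "__main__":
    # Hypothetical models: (parameters, training tokens, training cost in USD).
    for params, tokens, cost in [(400e9, 15e12, 150e6), (1e12, 20e12, 150e6)]:
        flops = approx_training_flops(params, tokens)
        print(f"{params:.0e} params, {tokens:.0e} tokens: "
              f"{flops:.1e} FLOPs, covered={is_covered(flops, cost)}")
```

Under this approximation, a hypothetical 400B-parameter model trained on 15T tokens lands around 3.6 × 10^25 FLOPs, below the threshold, while a 1T-parameter model trained on 20T tokens lands around 1.2 × 10^26 FLOPs, above it.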

MIT releases comprehensive database of AI risks: The database documents over 700 unique risks posed by AI systems and aims to help decision-makers in government, research, and industry assess and mitigate them.

Humans might need a permission slip to use the internet soon, thanks to AI: A team of researchers from OpenAI, Microsoft, MIT, and others has developed a method, “Personhood Credentials” (PHCs), that lets people anonymously prove they are human. The approach aims to counter a potential flood of AI bots and identity theft.