A Guide to the GPT-4o 'Omni' Model

The closest thing we have to "Her" and what it means for education and the workforce

Today, OpenAI introduced its new flagship model, GPT-4o, which brings more powerful capabilities and real-time voice interactions to its users. The “o” in GPT-4o stands for “omni,” referring to its enhanced multimodal capabilities. While ChatGPT has long offered a voice mode, GPT-4o is a step change: it lets users interact with an AI assistant that can reason across voice, text, and vision in real time.

Facilitating interaction between humans and machines (with reduced latency) represents a “small step for machine, giant leap for machine-kind” moment.

Key Updates

Learning on the Go: Instant Tutoring and Real-time Assistance

Real-time Translation

Personality and Conversational Skills: Picking Up Emotions + Interruptions!

Interpreting Audio, Text and Vision (Rock, Paper, Scissors)

Meeting Assistant

Image Generation Upgrades

Limitations

Does this pass the Turing Test?

What’s New:

Key Updates

In the API, GPT-4o is 2x faster, 50% cheaper, and has 5x higher rate limits than GPT-4 Turbo. (A rate limit is the number of requests or queries that can be made to the model within a specified time period.)
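To make the rate-limit definition concrete, here is a minimal sketch of a sliding-window limiter in Python. It assumes a simple requests-per-minute cap; the class name, the 100 and 500 requests-per-minute figures, and the sliding-window approach are illustrative assumptions, not how OpenAI actually enforces its limits (OpenAI meters both requests and tokens, with quotas that vary by usage tier).

```python
from __future__ import annotations

import time
from collections import deque


class SlidingWindowRateLimiter:
    """Allow at most `max_requests` requests in any rolling `window_seconds` period.

    Hypothetical illustration only: the limits used below are made up and
    are not OpenAI's real quotas.
    """

    def __init__(self, max_requests: int, window_seconds: float) -> None:
        self.max_requests = max_requests
        self.window_seconds = window_seconds
        self._timestamps: deque[float] = deque()

    def allow(self, now: float | None = None) -> bool:
        """Return True and record the request if it fits within the limit."""
        if now is None:
            now = time.monotonic()
        # Drop timestamps that have fallen out of the rolling window.
        while self._timestamps and now - self._timestamps[0] >= self.window_seconds:
            self._timestamps.popleft()
        if len(self._timestamps) < self.max_requests:
            self._timestamps.append(now)
            return True
        return False


def accepted_in_burst(limiter: SlidingWindowRateLimiter, n: int) -> int:
    """Fire n requests at the same instant and count how many are accepted."""
    return sum(limiter.allow(now=0.0) for _ in range(n))


# Hypothetical baseline of 100 requests per minute; "5x higher" would be 500.
baseline = SlidingWindowRateLimiter(max_requests=100, window_seconds=60)
five_x = SlidingWindowRateLimiter(max_requests=500, window_seconds=60)
print(accepted_in_burst(baseline, 1000))  # 100
print(accepted_in_burst(five_x, 1000))    # 500
```

In practice, a 5x higher limit means an app can serve five times as many requests in the same window before being told to wait and retry.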

Everyone gets access to GPT-4-level intelligence: “the special thing about GPT-4o is it brings GPT-4 level intelligence to everyone, including our free users,” said CTO Mira Murati. Free users will also get access to custom GPTs in the GPT Store, Vision, and Code Interpreter. ChatGPT Plus and Team users can start using GPT-4o’s text and image capabilities now.

OpenAI launched a ChatGPT desktop app for macOS, designed to integrate seamlessly into whatever a user is doing on their computer. A Windows version is also in the works (notable that the Mac version ships first, given Microsoft’s $10B investment in OpenAI).

ChatGPT’s multimodal capabilities allow it to “reason across voice, text, and vision.” It can better handle multiple voices and background noise. GPT-4o can also use a phone’s camera to read handwritten notes and detect a person’s emotions (more on that below).

The voice version of GPT-4o will be available “in the coming weeks.”

Some other significant features (and a LOT of demos incoming):

Learning on the Go: Instant Tutoring and Real-time Assistance

Sal Khan and his son tackle a math problem together, sharing their iPad screen with ChatGPT running GPT-4o. The AI can see what they are working on and guides his son through the problem-solving process in real time.

In the EdTech sector, many of us have become familiar with the promise of an on-demand AI tutor (and have seen many startups try to build a product around this vision). The benefits of an on-demand AI tutor are clear: learning in a shame-free and non-competitive environment, personalized learning paths, 24/7 availability, instant feedback and assessment, and scalability.

Here’s what GPT-4o looks like as a real-time coding assistant and tutor with audio and vision. You can share your screen natively and have ChatGPT quite literally work alongside you as a collaborative partner.

Real-time Translation

This demo shows GPT-4o working across languages simultaneously (in this case, acting as a translator between English and Italian). What could this look like if it were integrated into AirPods? Are we a step closer to the Babel Fish from The Hitchhiker’s Guide to the Galaxy or Star Trek’s Universal Translator? While questions remain about accuracy and contextual errors, this could clearly help advance language learning and cultural understanding.

In education and the workforce, the potential applications are vast: empower educators to engage effectively with non-English speaking families and stakeholders, pave the way for smoother integration of English Language Learner (ELL) students into classrooms, allow learners to instantly access learning materials from global knowledge bases irrespective of language barriers, facilitate more international collaboration projects, enhance communication for multinational companies that operate across different language zones, provide support and services to customers in multiple languages, and more.

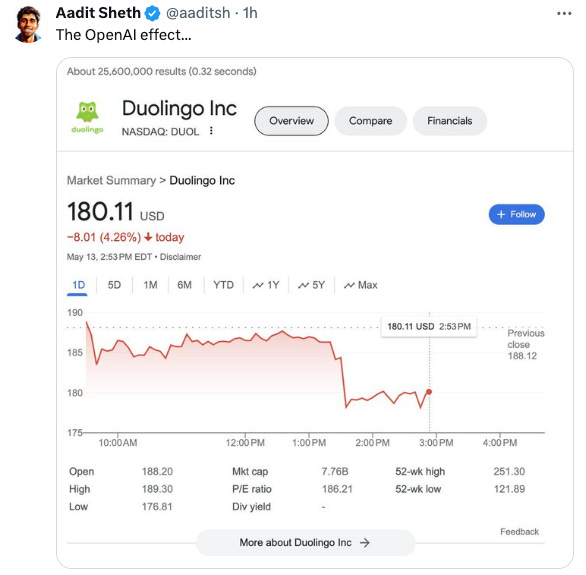

Duolingo’s stock certainly felt the “OpenAI effect,” losing roughly $340M in market cap shortly after the livestream:

Personality and Conversational Skills: Picking Up Emotions + Interruptions!

GPT-4o can be interrupted mid-response and can adjust its emotional tone and response on the spot, with minimal latency. More natural conversation could be a game changer across many fields, from entertainment and video games to storytelling and literacy platforms.

Two GPT-4os (technically a single system) harmonize with one another while adapting to new information and instructions in real time.

Interpreting Audio, Text and Vision

In this demo, users play Rock, Paper, Scissors with GPT-4o. The low latency with which it can “play” against real players is impressive in and of itself. GPT-4o goes a step further by visually recognizing the players and remembering their names, which it uses when announcing the winner.

Meeting Assistant

GPT-4o joins a meeting, facilitates a debate over cats vs. dogs, and efficiently summarizes the responses. It can presumably transcribe the entire meeting as well, which suggests many “live meeting assistant” apps and startups are at risk of disruption.

Image Generation Upgrades

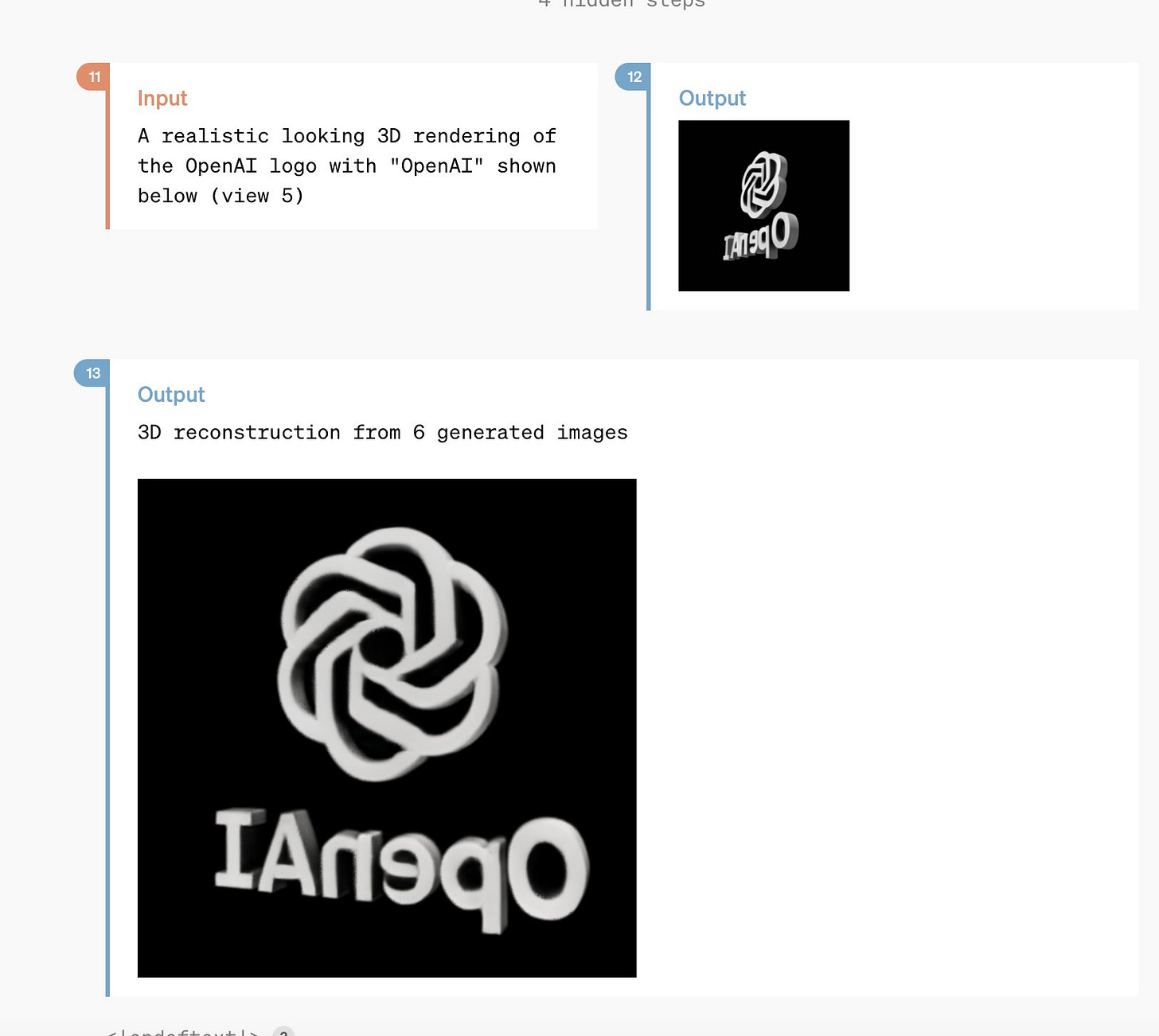

Without even mentioning it in the livestream, OpenAI casually dropped text-to-3D:

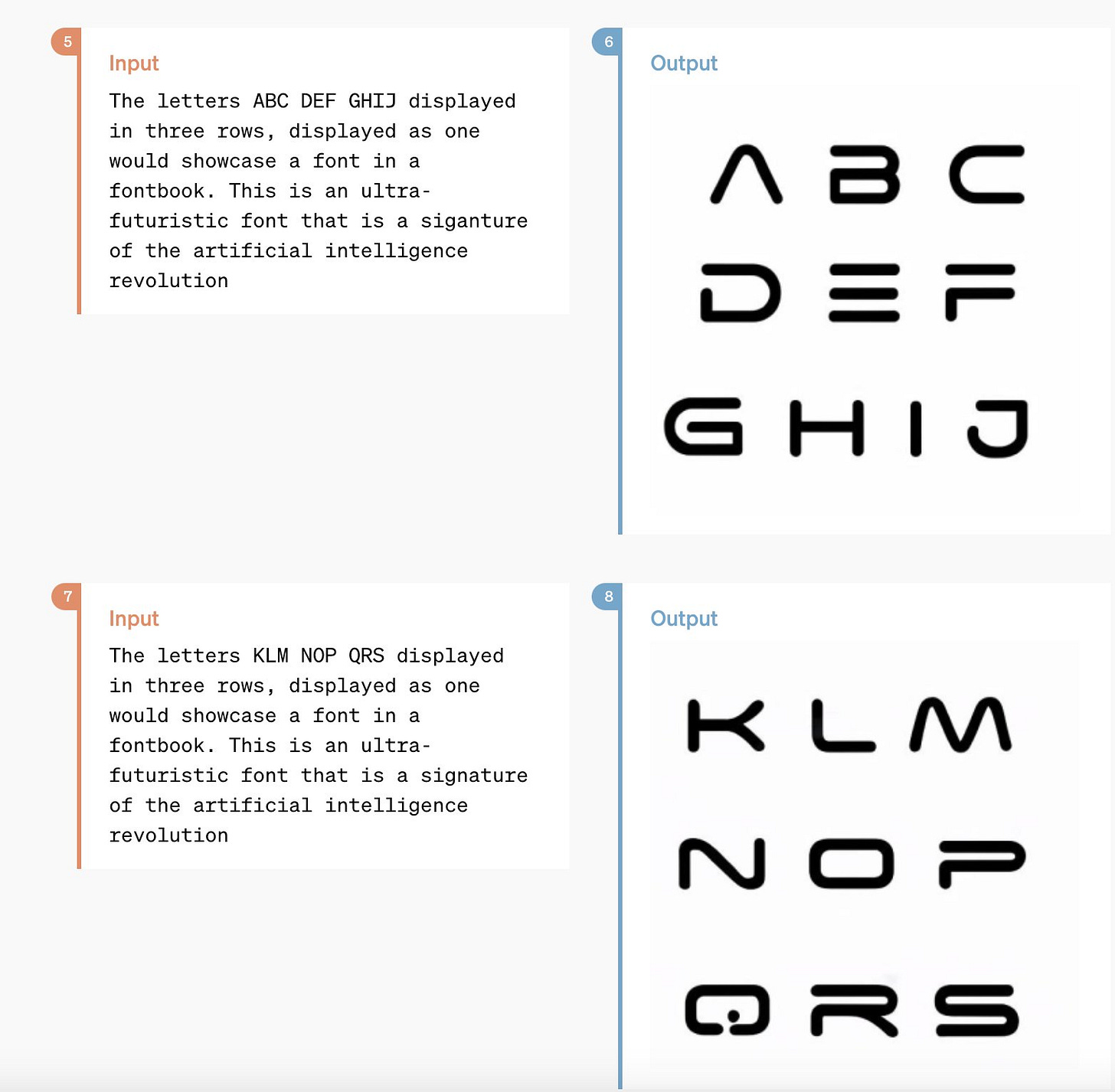

The ability to create fonts:

Multi-line text in images, even in cursive (just a couple of months ago, accurately generating text in images was a difficult task):

Limitations

The demos still showed occasional errors and confusion over which language to speak. Even so, GPT-4o can “giggle” while talking and even slightly modify its “accent” (“sometimes I can’t help muh-self,” as if speaking in character).

Does this pass the Turing Test?

The Turing Test is a famous “measure” of a machine’s ability to exhibit intelligent behavior indistinguishable from that of a human. If a human judge cannot reliably tell the machine from the human, the machine is said to have passed the Turing Test.

GPT-4o is clearly moving closer to that bar, demonstrating several key attributes:

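Low Latency: a minimal delay in the AI’s responses, mimicking the natural flow of human dialogue (OpenAI reports that GPT-4o can respond to audio in as little as 232 milliseconds, with an average of 320 milliseconds, similar to human response time in conversation)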

Full Duplex: the ability to communicate in both directions at once, listening and speaking at the same time. This enables more natural interruptions, affirmations while the other person is speaking, and simultaneous expressions of understanding or emotion

Prosody and Emotions: capturing rhythm, stress, and intonation of speech in order to convey emotions, sarcasm, questions, and other subtle conversational cues

Contextual Understanding and Memory: the AI can remember past interactions and use that information to make current conversations more relevant

The new voice (and video) mode is the best computer interface I’ve ever used. It feels like AI from the movies; and it’s still a bit surprising to me that it’s real…The original ChatGPT showed a hint of what was possible with language interfaces; this new thing feels viscerally different. It is fast, smart, fun, natural, and helpful. Talking to a computer has never felt really natural for me; now it does. - Sam Altman

I guess this gives “I’m dating a model” a whole new meaning…

