DeepSeek R-1 Explained

A no-nonsense FAQ (for everyone drowning in DeepSeek headlines)

There is a good chance you’re exhausted by the amount of DeepSeek coverage flooding your inbox. Between the headlines and hot takes on X, it’s hard not to have questions: What is DeepSeek? Why is it special? Why is everyone freaking out? What does this mean for the AI ecosystem? Can you explain the tech? Am I allowed to use it?

Let’s break down why exactly it’s such a big deal with some straightforward FAQs:

What is DeepSeek R-1?

DeepSeek is an AI lab backed by High-Flyer, a Chinese quantitative hedge fund known for using AI algorithms in trading. In 2023, High-Flyer expanded beyond its core business by launching DeepSeek as a dedicated AI research lab. Last December, DeepSeek made waves by releasing V3: a non-reasoning AI model that matched GPT-4o and Claude 3.5 Sonnet’s performance at just 1/5th the cost. Last week, it introduced R-1, its latest reasoning model, which performs on par with OpenAI’s o1 model (previously considered the most advanced).

DeepSeek V3 and R-1 are foundation models. Think of these as the “big brains” that serve as the fundamental building blocks of modern AI systems, from chatbots to edtech applications. Major players like OpenAI, Anthropic, and Google are all locked in a high-stakes race to build the best foundation models (the critical piece of AI infrastructure that others build upon). Owning the leading model means setting the standard and becoming the go-to platform for developers and builders.

AI labs are used to matching each other step for step. So what is so groundbreaking about R-1?

R-1 isn’t just as good as OpenAI’s best model in performance; it’s also 90% cheaper and nearly twice as fast. R-1 is also open-source, meaning anyone can download and run the model on their own hardware. According to DeepSeek’s V3 technical report, it took about two months and ~$6M to train V3, the base model R-1 is built on, using NVIDIA H800 chips. The researchers have also published detailed documentation of their development process, essentially giving away their blueprint for other researchers to build their own reasoning models.

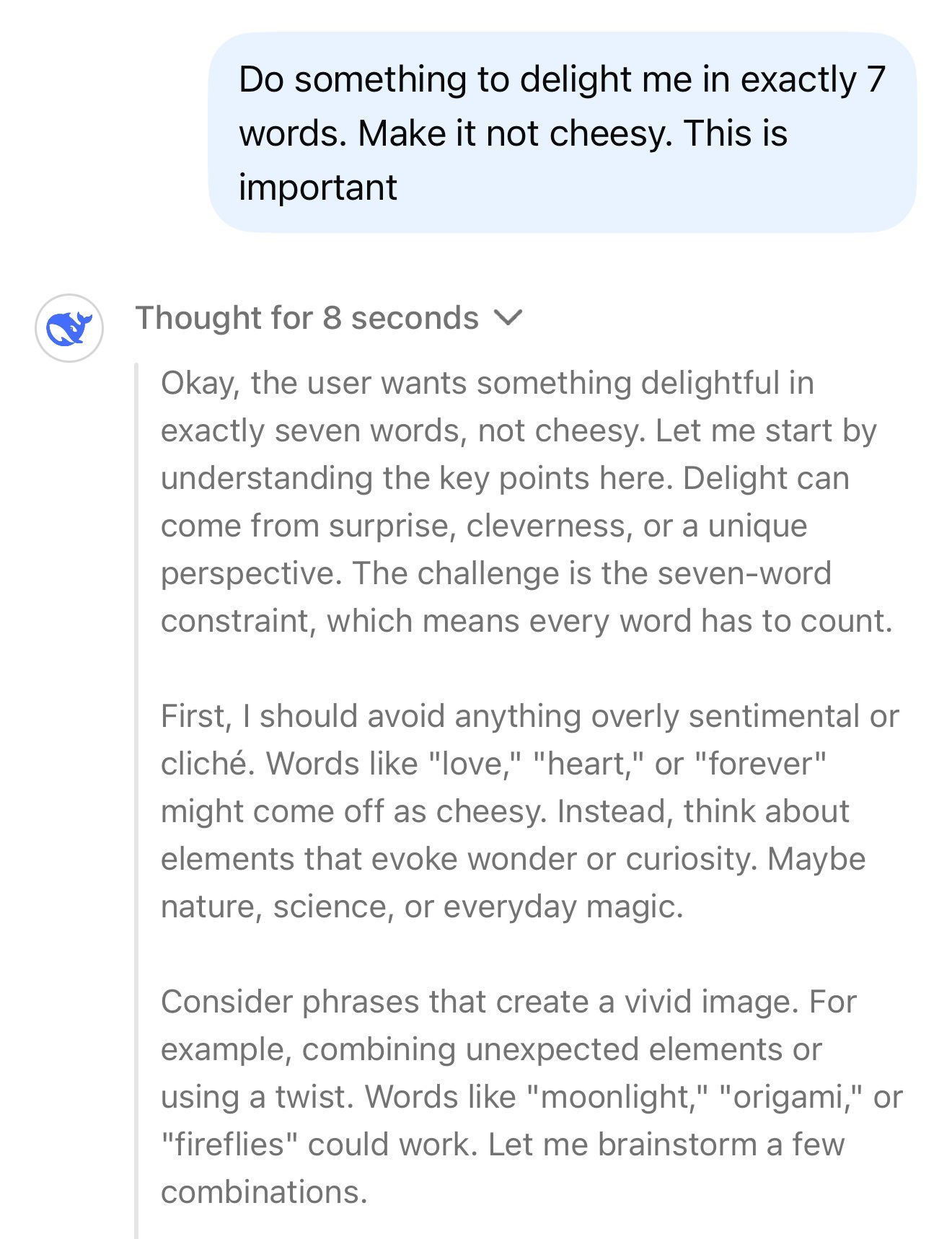

From a product standpoint, R-1 also exposes its chain-of-thought reasoning (more on this below), so users can see how the model arrives at its conclusion. It’s a window into its reasoning process: what it knows, what it doesn’t, and how it prioritizes information. Early feedback suggests that this makes interactions with the model feel more transparent and reliable.

What has happened since it was launched?

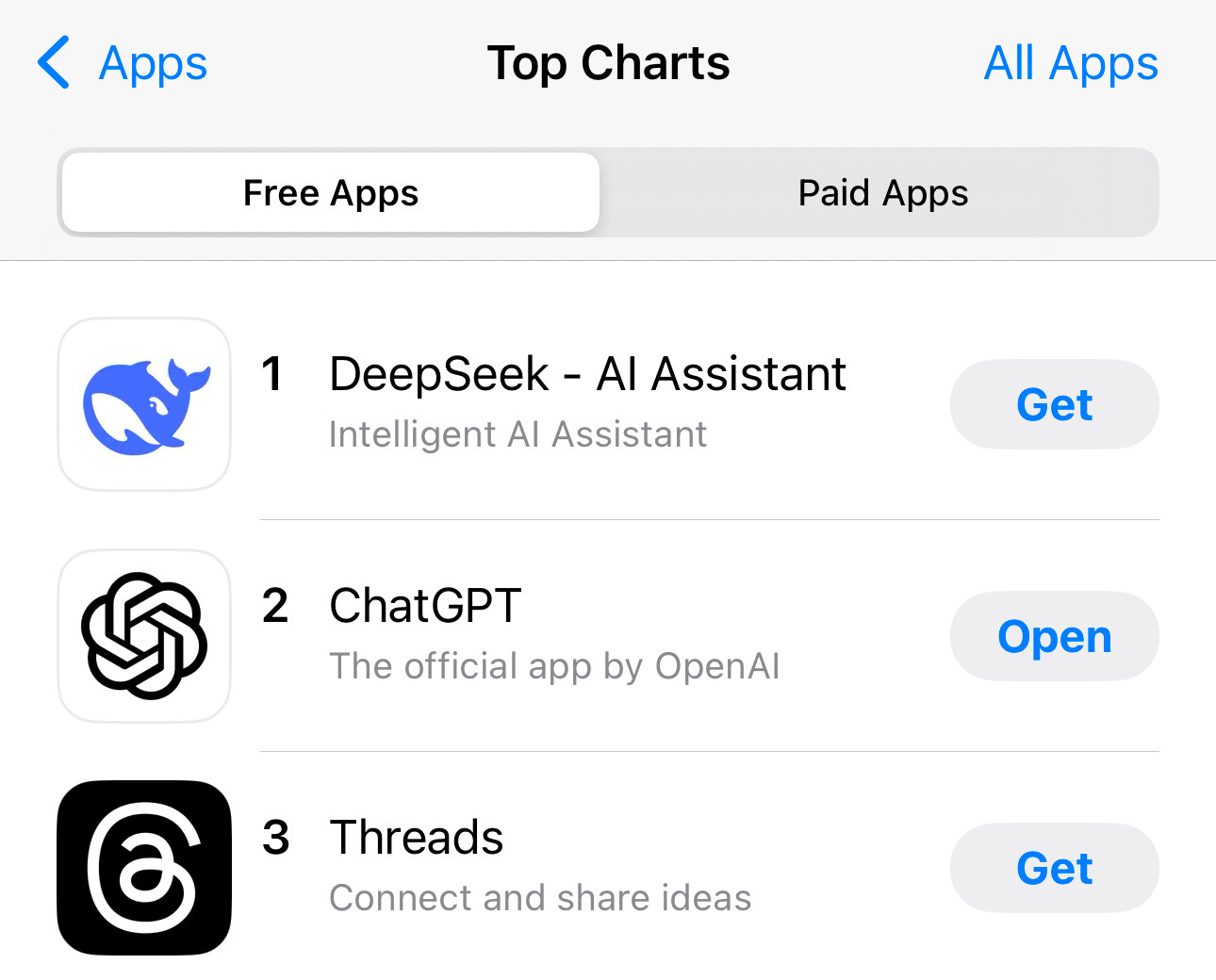

It skyrocketed to the #1 spot in the US App Store and in 51 other countries. By Monday morning, it had been downloaded 2.6M times. Over the past 7 days, DeepSeek saw nearly 300% more app downloads than Perplexity.

It triggered a $600B loss in NVIDIA’s market value, the biggest single-day drop for any one company in history, and wiped about $1.2T off the US markets by Monday.

It made the front page of the NYT, CNN, WSJ, and more. Meta is reportedly scrambling ‘war rooms’ of engineers to dissect DeepSeek. It’s drawn a response from Sam Altman. Marc Andreessen has praised the model as “a profound gift to the world” and called it AI’s “Sputnik moment”.

Why is everyone freaking out?

Interestingly, DeepSeek has been matching industry leaders’ performance on far smaller budgets for the better part of a year, starting with the release of its V2 model in May 2024 and continuing with V3 in December 2024. In fact, R-1 was released last Wednesday, but it initially flew under the radar outside the AI community. What really seems to have triggered the frenzy among investors and the public was the release and meteoric rise of DeepSeek’s app.

It’s important to clarify that R-1 isn’t “smarter” than the leading models it matches, just trained far more efficiently. Like other current-generation AI models, it still struggles with hallucinations and reliability. R-1 itself hasn’t necessarily moved the needle on the grand promise of AGI, but it has undeniably sparked an economic and geopolitical reckoning.

R-1 underscores the escalating stakes in the US-China AI arms race, but it’s also significant because it challenges one of the industry’s long-held assumptions: that building foundation models is a game reserved for players with billions to spend on CAPEX, and that pouring money into compute and relentlessly expanding infrastructure is the only path to progress. This assumption has been the prevailing narrative for industry heavyweights (OpenAI, Anthropic, Google) and has underpinned astronomical fundraising efforts, including last week’s announcement of the $500B Stargate Project.

Scaling at all costs may not be the best (or even necessary!) approach to advancing AI capabilities. R-1 showed that it’s now possible to develop state-of-the-art AI models without staggering budgets, enormous data centers, or massive compute resources.

What does it actually mean for the AI ecosystem?

The tech giants will waste no time trying to replicate and improve upon these results. We can expect a wave of similar optimizations, which ideally will translate to more options for AI models, greater innovation, and further cost reductions. As we’ve seen, it takes only a few months (or even weeks) for Google, OpenAI, Anthropic, Meta, and now DeepSeek to trade spots on the leaderboard.

The reevaluation of massive infrastructure and CAPEX investments has made NVIDIA one of the companies hit hardest by the broader tech-sector selloff. Some are calling this a potential “extinction-level event” for venture capital firms that went all-in on closed foundation model companies like OpenAI and Anthropic, as their once-proprietary advantages start to erode. Others see it as an “exponential event” for startups: a shift from concentrated power among a few frontier model providers to a thriving ecosystem where, as Garry Tan puts it, “a thousand flowers will bloom”.

As foundation models become more commoditized, the cost of intelligence will continue to drop. The value proposition of AI will no longer hinge on the models themselves, but on how effectively they are applied to solve real-world problems. The real winners will be those who build at the application layer — products that seamlessly integrate AI into workflows, leverage unique data flywheels, and deliver meaningful value to users.

This will fundamentally improve startup economics. Products will become smarter, faster, and cheaper to build and operate. While headlines might suggest that NVIDIA and AI infrastructure spending are the clear losers in this scenario, the reality could be more nuanced — see Jevons’ paradox: as a resource becomes cheaper and more efficient to use, its consumption skyrockets.
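Here is a toy calculation that makes the Jevons’ paradox argument concrete. It rests on one loudly stated assumption: demand for AI compute follows a constant-elasticity curve, quantity = K * price^(-elasticity), where K is an arbitrary scale. These are illustrative numbers, not market data. In this model, a price drop raises total spending only when demand is elastic (elasticity above 1):

```python
# Illustrative assumption (not market data): constant-elasticity demand,
# quantity = K * price ** (-elasticity), with an arbitrary scale K.
K = 100.0


def total_spend(price, elasticity):
    """Total spending = price per unit x units demanded at that price."""
    quantity = K * price ** (-elasticity)
    return price * quantity


for elasticity in (0.5, 1.5):
    before = total_spend(price=1.0, elasticity=elasticity)
    after = total_spend(price=0.1, elasticity=elasticity)  # unit cost falls 10x
    print(f"elasticity {elasticity}: spend {before:.0f} -> {after:.0f}")
```

After a 10x price drop, spending falls from 100 to about 32 when elasticity is 0.5. It rises to about 316 when elasticity is 1.5. The sketch can’t tell you which regime AI is actually in; that is an empirical question.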

Cheap AI won’t dampen demand - it will only make AI even more ubiquitous, powering everything from your iPhone’s Apple Intelligence to your smart refrigerator to your self-driving car. When everyone has access to the same powerful models, the real differentiator will be deeply understanding user problems and delivering tailored solutions.

Can you explain the tech?

You mentioned that DeepSeek’s R-1 and OpenAI’s o1 are “reasoning models”. How are these different?

We’ve been witnessing the rise of a new category of AI models, known as “reasoning models”. OpenAI’s o1 and o3 are among the best-known examples.

These reasoning models take a more sophisticated approach to solving problems, employing internal reasoning processes that mirror human trains of thought. Traditional LLMs typically focus on predicting the next word and generating responses immediately. Reasoning models differ by using:

Test-Time Compute (“thinking time”): These models intentionally take longer to process a question before generating an answer, often taking minutes to work through a problem step-by-step.

Multi-Step Reasoning: Instead of trying to answer a question all at once, reasoning models break down problems into smaller steps and evaluate different approaches before reaching a final conclusion. This is helpful for mathematical proofs or multi-layered coding challenges.

Visible Chain-of-Thought: Reasoning models often provide a transparent “chain of thought”, showing users exactly how they arrived at their conclusions. This could be game-changing for regulatory compliance. It also helps users identify where mistakes might occur, much like being able to see scratch work before the final answer.

Self-Correction: Reasoning models can also evaluate their own answers, detect errors, and fix them during the reasoning process. This adds a layer of reliability, particularly for tasks that require precision.
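One simple, well-known way to trade extra test-time compute for accuracy is self-consistency: sample several independent answers and keep the most common one. This is not a description of R-1’s internals. R-1 mainly spends its thinking time writing longer chains of thought. The “model” below is a hypothetical stand-in that answers correctly 40% of the time and otherwise makes one of a few different slips:

```python
import random
from collections import Counter

# Hypothetical question and "model": the right answer is 17 * 24 = 408. The toy
# model gets it right 40% of the time; otherwise it makes one of four slips.
CORRECT = 17 * 24
WRONG = [CORRECT - 10, CORRECT + 10, CORRECT - 17, CORRECT + 24]


def sample_answer(rng):
    """One independent attempt at the question (stand-in for one model sample)."""
    return CORRECT if rng.random() < 0.4 else rng.choice(WRONG)


def majority_vote(rng, n_samples):
    """Spend more test-time compute: draw n_samples answers, keep the most common."""
    votes = Counter(sample_answer(rng) for _ in range(n_samples))
    return votes.most_common(1)[0][0]


def accuracy(n_samples, trials=2000, seed=0):
    """Fraction of simulated questions answered correctly with n_samples votes."""
    rng = random.Random(seed)
    hits = sum(majority_vote(rng, n_samples) == CORRECT for _ in range(trials))
    return hits / trials


for n in (1, 5, 25):
    print(f"{n:>2} samples per question -> accuracy {accuracy(n):.2f}")
```

Wrong answers scatter while the right one recurs, so accuracy climbs as the number of samples per question grows. The price is proportionally more inference compute per question.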

How did DeepSeek cut costs and boost efficiency?

DeepSeek V3’s final training run reportedly cost just $5.6M, a fraction of the $100M+ that GPT-4 is said to have cost OpenAI. This cost efficiency stems from a combination of resource constraints and innovative techniques.

Barred from accessing NVIDIA’s most powerful chips due to US sanctions, DeepSeek researchers were forced to find creative ways to train and operate AI models on less powerful hardware, specifically NVIDIA’s H800 chips. These constraints led to breakthroughs in how they optimized memory, computing power, and training techniques.

Here is a simplified breakdown of a few of the techniques they employed:

Reinforcement Learning (RL): DeepSeek leaned on reinforcement learning rather than relying mainly on the traditional supervised learning approach. Instead of showing the model thousands of labeled examples, they let it learn from its own mistakes. The model generated answers, the answers were automatically checked for correctness, and the model improved based on that feedback. This trial-and-error approach enabled the model to develop reasoning skills on its own. Notably, the model began spending more time thinking when faced with complex problems, an emergent behavior that wasn’t explicitly programmed. OpenAI has said it also trained o1 with large-scale reinforcement learning, though it hasn’t published the details.
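The R-1 paper describes an RL algorithm called Group Relative Policy Optimization (GRPO), paired with rule-based rewards such as checking whether a math answer is correct. Below is a heavily simplified toy version. It assumes a “policy” that is just a softmax over four candidate answers rather than a language model. It keeps GRPO’s core idea, scoring each sampled answer relative to its group, and omits the clipping and KL penalty the real algorithm uses:

```python
import math
import random

# Toy "prompt": what is 17 * 3? The policy is a softmax over four candidate
# answers, a hypothetical stand-in for whole responses sampled from a model.
CANDIDATES = [41, 51, 54, 61]
CORRECT = 17 * 3


def softmax(logits):
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def reward(answer):
    # Rule-based, verifiable reward: 1.0 if the final answer is right, else 0.0.
    return 1.0 if answer == CORRECT else 0.0


def grpo_step(logits, rng, group_size=8, lr=0.5):
    probs = softmax(logits)
    # 1. Sample a group of answers to the same prompt from the current policy.
    idxs = rng.choices(range(len(CANDIDATES)), weights=probs, k=group_size)
    rewards = [reward(CANDIDATES[i]) for i in idxs]
    # 2. Group-relative advantage: each reward minus the group mean, over the std.
    mean = sum(rewards) / group_size
    std = math.sqrt(sum((r - mean) ** 2 for r in rewards) / group_size)
    if std == 0:
        return logits  # every answer scored the same: no learning signal
    advantages = [(r - mean) / std for r in rewards]
    # 3. Policy-gradient step; d log softmax(i) / d logit j = 1[j == i] - p_j.
    new_logits = list(logits)
    for i, adv in zip(idxs, advantages):
        for j in range(len(logits)):
            grad = (1.0 if j == i else 0.0) - probs[j]
            new_logits[j] += lr * adv * grad / group_size
    return new_logits


rng = random.Random(0)
logits = [0.0] * len(CANDIDATES)
start = softmax(logits)[CANDIDATES.index(CORRECT)]
for _ in range(200):
    logits = grpo_step(logits, rng)
end = softmax(logits)[CANDIDATES.index(CORRECT)]
print(f"P(correct answer): {start:.2f} -> {end:.2f}")
```

Answers that beat their group’s average get reinforced and the rest get suppressed. The probability of the correct answer therefore rises from 0.25 toward 1. No labeled examples of how to solve the problem are needed, only a checker for the final answer.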

Mixture of Experts (MoE): They employed a neural network architecture that splits the workload among smaller sub-models (or “experts”) that specialize in different kinds of input. A traditional “dense” model uses all of its parameters for every token. An MoE model instead activates only the most relevant experts for a given input. Loosely speaking, if the input involves math, the experts best suited to math are activated. This means the model can focus its computational power where it’s needed most. DeepSeek V3 (the base for R-1) has 671 billion parameters in total but activates only 37 billion of them for each token it processes.

Non-technical analogy: This is like having a hospital with hundreds of specialists (cardiologists, neurologists, surgeons, etc.). When a patient comes in, R-1 doesn’t have every single doctor in the hospital examine the patient (which would take a lot of time and effort). It has only the most relevant specialists step in to help.
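Below is a minimal sketch of top-k expert routing. It assumes a toy layer with 8 hypothetical experts and a router that scores each expert by a dot product with a per-expert vector. It then applies a softmax over the 2 chosen experts. DeepSeek V3’s real gating function and expert counts differ:

```python
import math

NUM_EXPERTS = 8
TOP_K = 2

# Hypothetical router: each expert owns a unit "centroid" vector, and a token's
# affinity for an expert is the dot product of the token with that centroid.
CENTROIDS = [
    (math.cos(2 * math.pi * e / NUM_EXPERTS), math.sin(2 * math.pi * e / NUM_EXPERTS))
    for e in range(NUM_EXPERTS)
]


def expert(e, token):
    # Stand-in for expert e's feed-forward network (hypothetical).
    return (e + 1) * (token[0] + token[1])


def moe_forward(token):
    scores = [cx * token[0] + cy * token[1] for cx, cy in CENTROIDS]
    # Route the token to its TOP_K highest-scoring experts; the rest stay idle.
    top = sorted(range(NUM_EXPERTS), key=lambda e: scores[e], reverse=True)[:TOP_K]
    # Softmax over the selected experts only, so their gate weights sum to 1.
    m = max(scores[e] for e in top)
    weights = {e: math.exp(scores[e] - m) for e in top}
    z = sum(weights.values())
    output = sum(weights[e] / z * expert(e, token) for e in top)
    return output, top


output, used = moe_forward((1.0, 0.1))
print(f"experts used: {used} ({len(used)}/{NUM_EXPERTS} of the layer's experts)")
print(f"DeepSeek's ratio: 37B / 671B = {37 / 671:.1%} of parameters per token")
```

Only the two selected experts do any work for this token. The other six are skipped entirely, which is where MoE’s compute savings come from.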

Multi-Head Latent Attention (MLA): DeepSeek also uses MLA, an attention mechanism it introduced with V2. Attention is how a model looks back at earlier parts of the text. Normally this requires storing large “key” and “value” vectors for every previous token. MLA compresses these into a much smaller latent vector. This shrinks the memory needed during inference, so the model can handle long inputs and serve more users on the same hardware. (Choosing which experts to activate is a separate job, handled by the MoE’s router.)

Non-technical analogy: MLA is like a doctor who keeps compact summary notes instead of re-reading every patient’s full chart each time. The notes capture what matters and can be expanded into detail when needed.

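Auxiliary-Loss-Free Load Balancing: DeepSeek also tackled the challenge of distributing work evenly across the experts, so that some experts don’t get overwhelmed while others sit idle. Earlier MoE models typically did this by adding an extra penalty (an “auxiliary loss”) to the training objective, which can hurt model quality. DeepSeek instead adjusts a per-expert bias on the router’s scores during training. The bias goes up for underused experts and down for overloaded ones. By balancing the compute load, the model operates more efficiently, avoiding bottlenecks during training and inference.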

Non-technical analogy: In a busy hospital, ER doctors might get overwhelmed while other specialists are underutilized. This system acts as a smart scheduler that ensures every doctor gets an even workload, which allows the entire hospital to run more smoothly.
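Below is a toy simulation of bias-based balancing, following the approach in DeepSeek’s V3 technical report. Each expert’s routing score gets a bias that is nudged down when the expert is overloaded and up when it is underloaded. The simulation assumes 4 hypothetical experts, top-1 routing, random router scores skewed toward expert 0, and a made-up update step GAMMA:

```python
import random

NUM_EXPERTS = 4
GAMMA = 0.01  # bias update step (hypothetical value)

rng = random.Random(0)


def affinities():
    # Hypothetical skewed router scores: expert 0 tends to look best for every token.
    return [rng.random() + (0.3 if e == 0 else 0.0) for e in range(NUM_EXPERTS)]


def route_batch(bias, batch_size=256):
    """Count how many tokens each expert receives under top-1 routing."""
    load = [0] * NUM_EXPERTS
    for _ in range(batch_size):
        scores = affinities()
        # The bias is added only when choosing an expert. In the full method the gate
        # weights that mix expert outputs still use unbiased scores (not modeled here).
        chosen = max(range(NUM_EXPERTS), key=lambda e: scores[e] + bias[e])
        load[chosen] += 1
    return load


def update_bias(bias, load):
    mean = sum(load) / len(load)
    new_bias = []
    for b, n in zip(bias, load):
        if n > mean:
            b -= GAMMA  # overloaded: make this expert slightly less attractive
        elif n < mean:
            b += GAMMA  # underloaded: make it slightly more attractive
        new_bias.append(b)
    return new_bias


bias = [0.0] * NUM_EXPERTS
first = route_batch(bias)
for _ in range(300):
    bias = update_bias(bias, route_batch(bias))
last = route_batch(bias)
print("load before:", first)
print("load after: ", last)
```

Before balancing, expert 0 takes far more than its share of tokens. After a few hundred small bias nudges, the load spreads out roughly evenly, and no extra term is ever added to the training loss.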

Want an even more technical breakdown? I found these in-depth explainers from Nathan Lambert and Zvi Mowshowitz extremely helpful.

Caveat: while the $5.6M figure has captured a lot of attention, it doesn’t necessarily reflect the full cost of developing frontier AI models like DeepSeek V3 or R-1. The $5.6M number likely represents the marginal cost of the final training run and excludes fixed costs like infrastructure and personnel. Running costs for compute and operations are likely hundreds of millions of dollars per year. Nonetheless, the $5.6M number shows how an efficiency-focused approach allows DeepSeek to punch above its weight.
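The V3 technical report arrives at its figure by multiplying H800 GPU-hours by an assumed rental price of $2 per GPU-hour. It states that the total excludes costs from prior research and ablation experiments. Here is the arithmetic, using the report’s GPU-hour breakdown:

```python
# GPU-hour figures as reported in the DeepSeek-V3 technical report (thousands of hours).
PRETRAINING_K_HOURS = 2664
CONTEXT_EXTENSION_K_HOURS = 119
POST_TRAINING_K_HOURS = 5
RENTAL_PRICE_PER_GPU_HOUR = 2.00  # USD; the report's stated assumption, not a real bill

total_gpu_hours = (
    PRETRAINING_K_HOURS + CONTEXT_EXTENSION_K_HOURS + POST_TRAINING_K_HOURS
) * 1000
cost_usd = total_gpu_hours * RENTAL_PRICE_PER_GPU_HOUR
print(f"{total_gpu_hours:,} GPU-hours x ${RENTAL_PRICE_PER_GPU_HOUR:.2f} = ${cost_usd / 1e6:.3f}M")
```

This covers DeepSeek V3 only. The additional reinforcement learning used to turn it into R-1 isn’t part of the figure.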

Can I try it?

Yes, the chatbot is available for free through DeepSeek’s website and mobile apps (iOS and Android). DeepSeek recently limited new sign-ups due to cyberattacks. If you do use it, note that DeepSeek’s privacy policy allows it to (1) send data back to China and (2) use that data to train future models (the latter is not uncommon among frontier model providers). As with most Chinese AI models, DeepSeek censors content critical of China or its policies.

For developers or founders, DeepSeek has open-sourced its core models under the MIT license, meaning anyone can download and modify them. If you install DeepSeek’s models locally and run them on your own hardware, you can interact with them privately: your data never leaves your system or goes to DeepSeek.
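If you run the open weights yourself, R-1’s raw output places its chain of thought inside <think>…</think> tags before the final answer. Below is a minimal sketch for separating the two, assuming that tag convention. Some chat templates insert the opening <think> tag into the prompt, so the helper only requires the closing tag. split_reasoning is a hypothetical helper name, not part of any DeepSeek library:

```python
def split_reasoning(completion):
    """Split a raw R-1-style completion into (reasoning, final_answer).

    Assumes reasoning ends with a closing </think> tag; the opening <think> may be
    missing if the chat template already put it in the prompt.
    """
    head, sep, tail = completion.partition("</think>")
    if not sep:
        return "", completion.strip()  # no reasoning block found
    reasoning = head.replace("<think>", "", 1).strip()
    return reasoning, tail.strip()


raw = "<think>\n17 * 3 = 51, double-check: 50 + 1 = 51.\n</think>\n\nThe answer is 51."
reasoning, answer = split_reasoning(raw)
print("Reasoning:", reasoning)
print("Answer:", answer)
```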

If you prefer to use their APIs, the pricing is highly competitive: $0.55 per million input tokens and $2.19 per million output tokens. In comparison, OpenAI’s o1 API costs $15 per million input tokens and $60 per million output tokens. OpenAI’s o3 model on high-compute mode can cost as much as $1,000 per task.
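To compare these prices on your own workload, plug in token counts. This sketch hard-codes the per-million-token prices quoted above, which may have changed since. The dictionary keys are just labels, and the 10M-input / 2M-output workload is a hypothetical example:

```python
# Per-million-token API prices quoted in this article (USD).
PRICES = {
    "deepseek-r1": {"input": 0.55, "output": 2.19},
    "openai-o1": {"input": 15.00, "output": 60.00},
}


def api_cost_usd(model, input_tokens, output_tokens):
    """Cost of a workload given raw token counts and per-million-token prices."""
    p = PRICES[model]
    return (input_tokens * p["input"] + output_tokens * p["output"]) / 1_000_000


# Hypothetical workload: 10M input tokens and 2M output tokens.
for model in PRICES:
    print(f"{model}: ${api_cost_usd(model, 10_000_000, 2_000_000):,.2f}")
```

For that workload, R-1 comes to $9.88 versus $270.00 for o1, roughly 27x cheaper.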

Have questions or thoughts to share? We’re in an exciting era of AI + Education, and we’d love to discuss it further. You can leave a comment or reach me at claire@gsv.com.
