2024 in AI & Education: Wrapped

Landmark moments and pivotal developments that transformed AI in Education in 2024

In 2023, we watched AI seemingly burst onto the scene. But 2024? It was the year AI became more woven into the fabric of our daily lives, reshaping how we work, learn, and interact. From groundbreaking advancements to near-daily updates, AI continued to dominate the conversation.

Let’s take a closer look at the year that was - filled with breakthroughs, controversies, and even moments that made us question if we should let AI suggest eating rocks.

🚀 Top Trends You Listened To (Read About) All Year

📈 The Metrics That Mattered

💰 2024’s Big Spenders

🎥 The Viral Moments

🛑 Moments That Divided the Industry

🚀 Top Trends You Listened To (Read About) All Year

Agents

While chatbots dominated the headlines in 2023, few topics captured the collective imagination in 2024 quite like agentic AI. Major tech players and startups alike tackled building AI agents: autonomous systems capable of handling multi-step tasks independently (without direct user input or human prompting between steps).

Take the example of booking a hotel room: what currently requires a sequence of searches, price comparisons, and form-filling could potentially be condensed into a single command: ‘book me a hotel room.’ Instead of our current navigation of the digital world click-by-click, the vision is for web usage driven by agents doing the pointing, clicking, and typing for us.

The race to build this vision is in full swing:

Salesforce’s Agentforce enables organizations to build customizable AI agents that can interact with enterprise data and automate sales, service, marketing, and commerce.

Anthropic’s Claude 3.5 Sonnet introduced Computer Use, allowing AI to navigate apps, move cursors, click buttons, and type text.

Google is working on Project JARVIS, a web-based autonomous agent capable of performing tasks within Chrome.

Replit Agent serves as a virtual pair programmer, generating code and building software projects through natural language prompts.

OpenAI has teased a new AI agent “Operator” for January 2025 release.

Workera launched Sage, an agentic digital mentor for employee skill assessment.

Startups like Artisan and 11xAI are building AI employees to automate entire business workflows.

Yet, there are still major questions around security and agents acting beyond user intent - what happens when we hand over system access to these digital intermediaries? Could an agent tasked with approving financial aid mistakenly deny eligible students or expose sensitive data? Where do we draw the line between utility and control?

While 2024 may not have delivered the fully realized vision of autonomous agents, it definitely laid the technical groundwork for their emergence (though there's a wide range of predictions of when this vision will truly arrive...). AI may enable a fundamental shift in human-computer interaction: rather than humans adapting to computer interfaces through clicks and keystrokes, computers may finally learn to interpret and execute human intent naturally.

Multimodality

AI's early days revolved around text-based inputs, but 2024 marked a breakthrough as multimodal systems brought voice, audio, images, and vision into the fold. For the first time, AI seemed to be inching closer to experiencing the world as we do.

Today’s systems are rapidly mastering the full spectrum of human perception (sight, sound, and even smell!) while understanding context across multiple communication modes.

Video generation took center stage in the latter half of the year. The releases of OpenAI’s Sora, Google’s Veo 2, Adobe’s Firefly, Meta’s Movie Gen, Kling AI, and Runway’s Gen 3 Alpha showcased a new ability to produce high-resolution, extended-length videos with unprecedented speed and detail.

If 2023 was the year AI mastered static image generation, 2024 was the year it learned to tell stories through motion. There are whispers 2025 could be the year voice technology takes the spotlight.

ElevenLabs, a startup that makes AI tools for audio applications, is on track to triple its valuation to more than $3B and has allegedly reached $80M in ARR. OpenAI’s latest advancements in Advanced Voice Mode deliver real-time speech translation with near-zero latency and, most recently, video and screen-sharing capabilities.

The system doesn't just read your words; it sees your screen, hears your voice, and responds with context that draws from all these inputs simultaneously (like a human would). For tasks like troubleshooting tech issues, learning new skills, or getting real-time feedback, multimodality is a game-changer.

In education, homework help has evolved from static answer keys to dynamic multimodal tutorials that can process any type of question (written, spoken, or visual). In the classroom, platforms like TeachFX analyze classroom audio to provide nuanced feedback on teaching practices. Duolingo and Speak have embedded AI video calls with their characters to simulate open-ended, real-world conversations. Synthesia empowers L&D professionals to generate training videos with AI avatars in minutes.

This evolution has also sparked something of an XR renaissance. Google's Project Astra AR glasses, running on the new Android XR operating system, hint at a future where AI's sensory capabilities merge seamlessly with our own. Augmented reality, once a pipe dream haunted by attempts like Google Glass, is now closer than ever.

It’s important to note that the computational demands of processing multiple streams of input are staggering. Sora, for instance, can require up to 20 minutes to render just three seconds of video.

These developments also dovetail with recent advances in world models and physical intelligence, where AI not only perceives but begins to understand the fundamental physics and causality of the world. As AI agents gain the ability to integrate multiple sensory inputs — seeing, hearing, and responding with human-like fluidity — we might have a future where AI truly interacts with the world as we do.

A Cocktail of Models

“Bigger” no longer means “better”. While the AI arms race historically focused on scaling models at all costs, 2024 saw the emergence of a more nuanced model ecosystem, one that might best be described as a “cocktail of models”. Success might not lie in finding a single supreme model, but in blending an ensemble of models to create something greater than the sum of its parts.

Instead of a one-size-fits-all approach, the industry saw more solutions built around model size and reasoning:

The industry saw strong downward pressure on model size with releases of model families like Microsoft Phi-3, Google Gemma 2, and Hugging Face SmolLM, each arriving in multiple size variations. Smaller models offered advantages in speed and cost, running efficiently on modest hardware.

The focus also shifted from raw computational power to optimized reasoning capabilities. OpenAI’s o3, DeepSeek R1, and Google’s Gemini 2.0 all demonstrated that sophisticated thinking could emerge from optimized architecture rather than brute-force processing. (More on this below.)

This diversification, combined with fierce competition among model makers and cloud providers, triggered an unprecedented democratization of AI access as providers waged a price war. Costs plummeted while capabilities soared: the cost of LLM inference fell 100x in just two years (from roughly $50 to $0.50 per 1M tokens), while OpenAI's GPT-4 Turbo and Anthropic's Claude 3.5 Sonnet delivered enhanced capabilities and superior reasoning at a fraction of predecessor models' costs.
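To make the scale of that price drop concrete, here is a minimal sketch of the arithmetic in Python. The two per-token prices are the approximate figures quoted above; the workload (a hypothetical tutoring app processing 200M tokens a month) is an assumption chosen purely for illustration, not a number from any real product.

```python
# Approximate prices per 1M tokens, taken from the figures quoted above.
PRICE_THEN_USD = 50.00
PRICE_NOW_USD = 0.50


def inference_cost(tokens: int, price_per_million_usd: float) -> float:
    """Return the cost in USD of processing `tokens` tokens."""
    return tokens / 1_000_000 * price_per_million_usd


# Hypothetical workload (an assumption, not a figure from this post):
# a tutoring app that processes 200M tokens per month.
monthly_tokens = 200_000_000

cost_then = inference_cost(monthly_tokens, PRICE_THEN_USD)
cost_now = inference_cost(monthly_tokens, PRICE_NOW_USD)

print(f"Then: ${cost_then:,.2f} per month")
print(f"Now:  ${cost_now:,.2f} per month")
print(f"Reduction: {PRICE_THEN_USD / PRICE_NOW_USD:.0f}x")
```

Under these assumptions, the same monthly workload goes from about $10,000 to about $100, which is the kind of shift that puts AI features within reach of a small edtech team.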

For founders, the barrier to entry for AI innovation has dropped dramatically. The question is also no longer "Which model is best?" but rather "Which combination of models best serves our purpose?" More on breakthroughs in open-source, small language models, and domain specialization from this year:

📈 The Metrics That Mattered

$3 Trillion: Nvidia became the third U.S. company ever to hit a $3T market cap, driven by surging demand for its GPUs — the backbone of AI models like OpenAI’s ChatGPT. The company’s stock has soared 3,224% over five years. (June)

300M Weekly Active Users: ChatGPT reached 300M weekly active users, triple the 100M it had just a year prior. Users now send over 1B messages daily. OpenAI’s goal: 1B active users by 2025. (December)

36% of teachers: haven’t used AI tools and don’t plan to, while only 2% use them extensively. AI adoption in classrooms shows a wide spectrum, with 23% of educators open to future use and 9% planning to start this school year, signaling both persistent hesitancy and growing interest in experimentation. (October)

46% of all U.S. venture capital funding: flowed into AI companies—a massive leap from 22% in 2022. With nearly half of all software companies integrating AI, this surge in investment highlights a profound shift: every software company is becoming an AI company. (December)

99% decline: in Chegg’s stock from its all-time high, erasing $14.5B in market value since early 2021, as ChatGPT became the preferred homework helper for 62% of students, up from 43% earlier in the year. (November)

23 states provide AI guidance: Nearly half of U.S. states have released formal AI guidelines for K-12 schools, marking a significant shift from zero guidance just a year ago. States like Utah are creating AI-specific roles, while Indiana and New Jersey are dedicating budgets to support implementation. (September)

86% of students: use AI tools for their studies, with 24% using them daily and 54% weekly or more. Despite widespread use, 58% of students feel underprepared to navigate AI tools confidently, and 48% feel unready for an AI-driven workforce. (August)

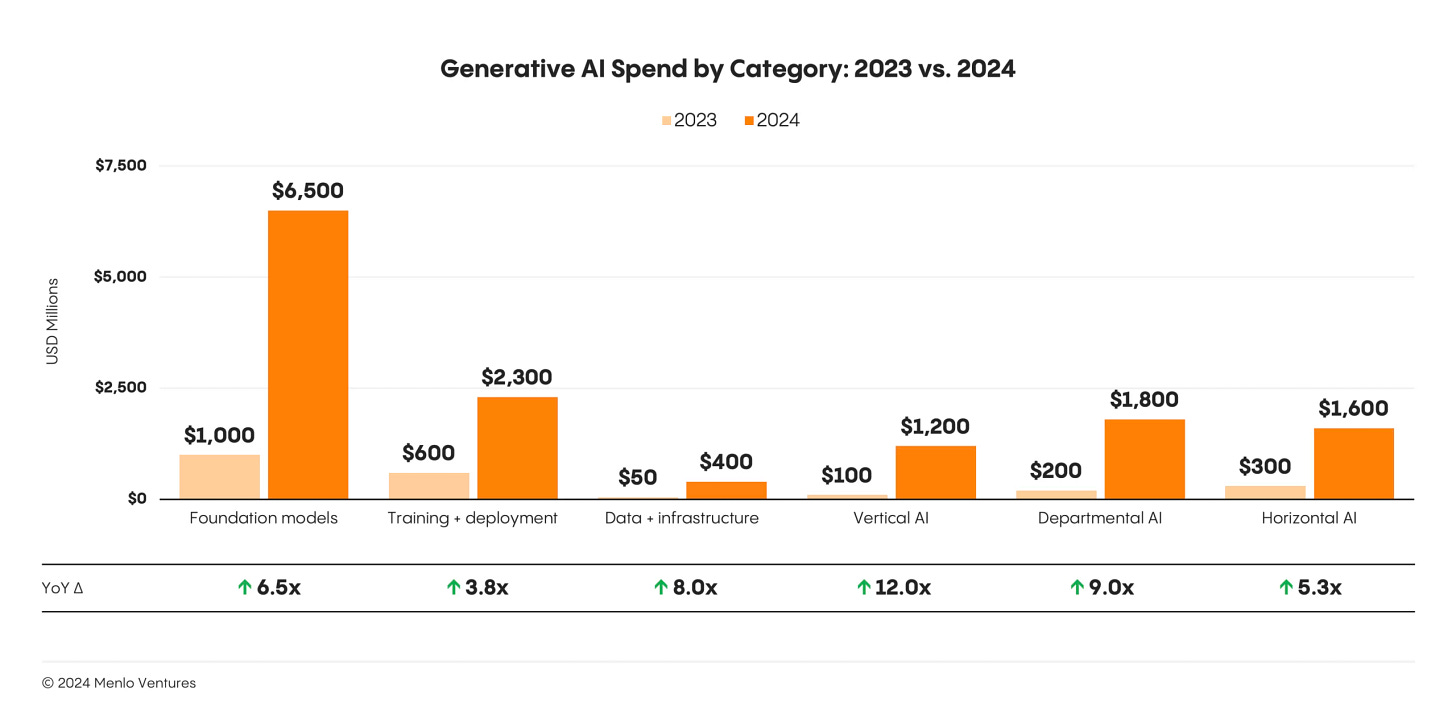

$13.8B in enterprise AI spending: Enterprises cemented generative AI as mission-critical, with spending surging 6x from $2.3B in 2023. Investments in foundation models, deployment, and applications (like Microsoft Copilot and Adobe Firefly) reflect a decisive shift from experimentation to embedding AI as core to business strategies. (November)

$600B in projected costs: AI's infrastructure expenses ballooned as companies invested heavily in GPUs and data centers to power the next wave of innovation. Despite record spending, the gap between costs and realized revenue continues to grow, raising critical questions about sustainability and profitability. (June)

💰 2024’s Big Spenders

The Great Infrastructure Gold Rush

A new kind of infrastructure boom is taking shape — not for railroads or oil wells, but for vast data centers that are becoming the backbone of AI’s explosive rise. The insatiable demand for computational power driven by generative AI and multimodal applications has triggered unprecedented spending from Big Tech. This surge has created boom times for companies providing the “picks and shovels” of this digital era.

Amazon, Google, Meta and Microsoft together increased infrastructure investments by 81% year over year during Q3 2024. Microsoft announced plans to invest over $80 billion in AI data centers in fiscal 2025 (half of it in the U.S.), along with $35 billion across 14 other countries. They're also partnering with BlackRock/MGX on a potential $100B AI infrastructure fund.

Elon Musk’s ‘Gigafactory of Compute,’ xAI Colossus, received approval in early November to receive 150MW from Tennessee’s power grid, enabling all 100,000 GPUs at the site to run simultaneously.

Tech giants are also turning to nuclear power. Microsoft’s data centers will soon be powered by the revived Three Mile Island Unit 1 (yes, that Three Mile Island). OpenAI and Microsoft are co-developing a $100 billion AI supercomputer data center called Stargate. Google has partnered with Kairos Power to leverage small modular reactors (SMRs) for its AI operations.

One question looms large: are we witnessing the foundation of a new digital economy, or the early signs of an AI bubble? In June, Sequoia published "AI's $600B question," challenging the ROI of massive infrastructure spending. The environmental impact is equally notable, with data centers already consuming 1-2% of global electricity — a figure expected to double by 2030.

Yet, it’s worth remembering how early we are in this transformation. During the internet boom from 1996 to 2001, over $1T in capital was invested. In comparison, the generative AI buildout has seen just $300B in the last two years. By this measure, we may still be in the very early innings of this AI-fueled infrastructure race, and the stakes couldn’t be higher.

Big Tech’s “Reverse Acquihire” Playbook

Instead of outright purchasing AI startups, companies like Google, Microsoft, and Amazon embraced a “reverse acquihire” strategy: hiring key personnel while licensing the startups' technologies. This approach allowed incumbents to integrate cutting-edge AI talent and innovation while sidestepping regulatory scrutiny:

Microsoft & Inflection: hired Inflection AI co-founders (including Mustafa Suleyman) and most of its employees, and licensed access to Inflection's AI model. The total deal likely exceeded $1B. (March)

Amazon & Adept: absorbed key Adept AI team members, including CEO David Luan, to bolster AGI initiatives. The FTC and other regulators are scrutinizing the deal. (June)

Google & Character.AI: (re-)hired Character.AI co-founders Noam Shazeer and Daniel De Freitas, along with their team, through a $2.7B deal with a licensing agreement for Character.AI's technology - allegedly the ability to run high-quality, interactive AI models at scale without breaking the bank, which is important as we see diminishing returns to pre-training. (September)

Sovereign AI and the New Arms Race

Nations also entered their own high-stakes competition: the race to establish sovereign AI capabilities. A U.S. congressional commission recently advocated for a “Manhattan Project for AI”. The geopolitical fault lines of 2024 increasingly aligned with AI development, as countries raced to avoid technological dependence on rivals. A few key players:

United States: The U.S. remains dominant across all layers of the AI stack. NVIDIA leads at the infrastructure layer, while hyperscalers like Google, Microsoft, and Amazon drive massive compute investments. At the application layer, a surge of new entrants is seizing the golden opportunity to reshape industries, propelled by labs like OpenAI and Anthropic.

China: China’s AI ambitions are undeniable. Models like Alibaba’s Qwen and DeepSeek’s R1 are rivaling (or in some cases surpassing) OpenAI’s o1 in reasoning capabilities. In response to U.S. export restrictions, China launched a $47.5B semiconductor fund to bolster chip and software autonomy. With 83% enterprise adoption, China now leads the world in generative AI uptake.

Europe: Europe continues to balance innovation with regulation. The EU AI Act, the world’s first comprehensive AI governance framework, set the tone for ethical AI adoption but also slowed AI rollouts from Meta and Apple. Paris is emerging as a vibrant AI hub, home to startups like Mistral, Dust, Poolside, H, and others.

Japan: Tokyo became home to OpenAI’s first Asian office, and domestic champions like Sakana AI achieved unicorn status. Culturally, the Japanese public remains optimistic about AI: only 12% fear an AI-driven future compared to 36% of Americans.

United Arab Emirates (UAE): The UAE is making waves by leveraging its sovereign wealth and strategic location to bridge Western innovation with emerging markets. Major moves include G42’s $1.5B partnership with Microsoft, MGX’s backing of OpenAI’s $6.6B October fundraise, and the Global AI Infrastructure Investment Partnership with Microsoft and BlackRock.

🛑 Moments That Divided the Industry

Are AI Scaling Laws Hitting a Ceiling?

OpenAI and Google have recently grappled with diminishing returns on larger models - a significant shift from the period from 2018 to 2022, when increases in model size reliably produced dramatic improvements in capability. Simply scaling up computing power and datasets no longer yields the dramatic improvements it once did. For example, the jump in capabilities from GPT-3 (175B parameters) to GPT-4 (estimated >1T parameters) wasn't nearly as dramatic as the leap from GPT-2 to GPT-3, despite using vastly more computing power.

In response, the AI research community has split into two main camps: one group championing fundamental innovations rather than raw scale, focusing on emerging approaches like test-time compute, new architectures, and multi-step reasoning. The other camp maintains that current hardware limitations are the real bottleneck and that traditional scaling still has room to run.

While companies definitely aren't abandoning large-scale training entirely, there's growing recognition that the next wave of AI improvements may come from letting models process information more thoroughly rather than just making them bigger. Will this ceiling be a forcing function for the field to develop more sophisticated approaches to AI development?

Can AI Regulation Keep Pace With Innovation?

The fight over AI regulation intensified throughout 2024. The EU set a landmark precedent with its AI Act, introducing strict risk-based categories and mandatory safety requirements for AI systems.

In the U.S., the debate reached a flashpoint when California lawmakers passed AI safety bill SB 1047, only to see Governor Gavin Newsom veto it on the grounds that it was overly broad, focused only on large AI models, and could hinder innovation. The bill would have required mandatory safety testing, kill switches, and greater oversight of frontier models. Proponents argued that these measures were necessary to address concerns around bias, transparency, and potential malicious use of AI systems.

Critics argued that heavy-handed regulation would stifle innovation and create insurmountable barriers for smaller companies through compliance costs and bureaucratic overhead.

This tension looks set to intensify in 2025, with President-elect Trump's pledge to repeal Biden's AI executive order signaling a dramatic shift toward deregulation.

Academic Integrity in the Age of AI

Educators continued to scramble for solutions around the AI cheating problem. AI detectors became a focal point of the debate, with many technologists arguing that these tools lack accuracy, discriminate against non-native English speakers, and are prone to false positives. This has left educators grappling with cases where students claim they used AI only as a reference or grammar checker.

In some cases, the lack of clear policies has led to legal consequences. In October, a Massachusetts high school faced a lawsuit from the parents of a student disciplined for using AI on an assignment. The lawsuit argued that the school lacked clear AI policies at the time, and that its student handbook did not explicitly prohibit AI use.

Watermarking systems, AI text detectors, and change-tracking software have often struggled to keep pace with the rapid evolution of generative AI, making them a temporary fix at best. Critics argue that the reliance on detection tools perpetuates an arms race between cheaters and educators, rather than addressing the root causes of academic dishonesty.

The situation is further complicated by educators' own adoption of AI tools. The implementation of AI-powered grading systems has sparked controversy, with teachers expressing concerns about their reliability and impersonal nature. Students have pointed out the hypocrisy of institutions that employ AI tools while restricting student access to similar technologies.

As generative AI becomes a permanent fixture in education, the debate over academic integrity is far from over - will 2025 be the year institutions reconsider traditional approaches to academic integrity? Can we develop assessment methods that remain meaningful in an AI-enhanced world?

Who's Responsible When AI Relationships Go Wrong?

This year, the world witnessed the first reported tragedy linked to AI companions: the suicide of 14-year-old Sewell Setzer. His mother filed a lawsuit against Character.AI, alleging that the company’s chatbot contributed to her son's death by fostering emotional dependence and failing to safeguard vulnerable users.

AI companions, pitched for their ability to alleviate loneliness and provide emotional support, have seen rapid adoption. By early 2024, there were already over 20M people across the globe using Character.AI. Almost 60% of the platform’s audience is between the ages of 18 and 24, with over half of them being male. Snapchat introduced My AI, a digital companion designed to resemble any other Snapchat user, complete with a customizable Bitmoji avatar, reinforcing its role as a 'friend' integrated into social circles. Instagram has recently taken a step further by normalizing AI influencers, enabling users to create hyper-realistic, interactive digital personas.

In schools, AI companions are being introduced as 24/7 mental health resources, filling gaps where human counselors are unavailable. It’s clear AI companions are becoming embedded in everyday social and emotional experiences, often without much oversight or guardrails.

Are AI companions fostering genuine connection, or are they creating unhealthy dependencies, especially among teens and children? Critics warn of psychological risks, including anthropomorphization of AI (where users attribute human characteristics to machines) and emotional manipulation. Children, in particular, may struggle to distinguish human empathy from AI responses, risking unhealthy attachments that could harm real-world relationships.

In response, some companies have begun implementing safeguards. Character.AI recently retrained its chatbots to avoid romantic or sensitive interactions with teens, introducing an under-18 model specifically designed to redirect minors toward safe content.

But is it enough? Should age restrictions be mandatory? How much transparency should companies provide about the emotional capabilities of their AI? Is having an AI therapist or friend better than having no support at all?

🎥 The Viral Moments

When Google’s AI Told Us to Eat Rocks: Google's generative AI search summaries launched with a bang and some big mistakes. The AI advised users to “eat rocks,” “put glue on pizza,” and claimed smoking while pregnant was healthy. Despite the chaos, Google doubled down on its Gemini-powered AI responses. (May)

“Stop Hiring Humans”: AI employee startup Artisan caused a stir with campaign slogans like “Artisans don’t complain about work-life balance” and “Artisan’s Zoom cameras will never ‘not be working’ today”. While the campaign generated millions of impressions and boosted sales of their AI sales agent, it also earned thousands of death threats and became a lightning rod for debates about AI's impact on human work. Rage bait at its finest? (December)

Docs → Podcasts: Google’s NotebookLM went viral with its Audio Overviews feature, transforming text documents into podcast-style conversations. What started as a viral social media phenomenon - with users sharing AI-hosted discussions of everything from business reports to academic papers - evolved into a serious business tool. By year's end, over 80,000 organizations had adopted the technology, leading to the launch of NotebookLM Business. (October)

The Robot that Conquered Laundry Day: Designed as a universal "brain" for any robot and task, Physical Intelligence’s π₀ (pi-zero) wowed the world with its ability to autonomously unload a dryer, carry clothes to a table, and fold them into a neat stack without any human intervention (a notoriously tricky task requiring precision, adaptability, and dexterity). π₀ offers a glimpse into a future where intelligent robotic assistants handle the tasks we least enjoy. Welcome robot laundry overlords?

Brain-Computer Interfaces: In 2024's most sci-fi-turned-reality moment, Noland Arbaugh, a 29-year-old quadriplegic, became the first human to use Neuralink's brain implant. Within weeks of the January procedure, he was controlling computer cursors and playing online chess with just his thoughts during a livestream. (March)

🌟 The Next Big Thing?

It’s evident that AI is not just advancing technology - it’s prompting fundamental questions about society and humanity. The questions we face aren't just technical but deeply human: how do we harness AI's potential while preserving what makes us uniquely human? How do we ensure this technology creates equal access to the future for ALL?

One thing's certain: we are just at the beginning of this AI revolution. While the road ahead remains uncertain, it’s full of opportunities for thoughtful innovation and meaningful change. The intersection of AI and education is vast and complex, with far more developments and nuances than we could cover in a single post. As always, we’d love to hear from you: what do you think the next big thing in AI and education will be?

Finally, a huge thank you for reading, engaging and learning with us on this journey through the AI Education landscape. It's an honor to be able to track this space together. Here’s to 2025: we’re so excited to explore what comes next!
